Here are some of my random personal projects

A 3D music exploration / discovery tool written in Godot 4. I used EfficientAT, an audio tagging model trained on the AudioSet dataset, which lets me score audio/music across 520 different categories. Based on these scores, I implemented a 3D cube that plots each song from a large playlist along 3 user-chosen axes. This lets the user visualize and explore music according to whichever criteria they choose.
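As a rough sketch of the plotting idea (in Python rather than the project's Godot code), each song's tag scores can be reduced to a 3D point by picking three categories as axes. The function name, song titles, category names, and scores below are made up for illustration.

```python
def plot_positions(songs, axes):
    """Map each song to an (x, y, z) point from three chosen tag scores.

    songs: dict of title -> dict of tag name -> score (assumed layout)
    axes:  three tag names chosen by the user
    """
    x_tag, y_tag, z_tag = axes
    return {
        title: (
            scores.get(x_tag, 0.0),  # a missing tag counts as a score of 0
            scores.get(y_tag, 0.0),
            scores.get(z_tag, 0.0),
        )
        for title, scores in songs.items()
    }


# Hypothetical scores, not real model output.
songs = {
    "song_a": {"Piano": 0.9, "Drum": 0.1, "Singing": 0.4},
    "song_b": {"Piano": 0.2, "Drum": 0.8},
}
points = plot_positions(songs, ("Piano", "Drum", "Singing"))
```

Swapping the axes tuple is all it takes to re-plot the same playlist along different criteria.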


Kinect Visualizer

My magnum opus. A feature-rich Kinect visualizer that takes the raw depth images from a Kinect and colors them based on various characteristics. Some of its features include:

- recording and playing back raw sensor data

- music variation

- many inputs (Xbox controller, MIDI, accelerometer, etc.)

- realtime ray-tracing

- augmented reality (by streaming to a phone & Google Cardboard)

- setting and saving presets

Technologies Used

This project was written in C++ and uses the following libraries:

- Freenect (raw Kinect capture)

- OpenCV (framebuffer arithmetic)

- Embree (raytracing)

- GStreamer (live streaming)

Sub-projects

Early Beginnings (First recordings)

I had recently found an old Kinect for Xbox 360 my family used to have. It is an interesting device, as it includes a depth sensor and a full color camera. Wanting to get a feel for what the depth sensor was capable of, I found a C++ library, Freenect, that allowed me to capture the raw depth images. These are captured as grayscale 640x480 images where each pixel's value corresponds to its depth.

I first started by estimating the surface normal at each pixel by looking at two of its neighbors and treating those 3 points as the vertices of a triangle. Unfortunately, the Kinect 360 lacks spatial coherency and introduces a lot of noise. It was while trying to reduce this noise that this entire project came about.
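The triangle idea can be sketched in a few lines of Python (the project itself is C++). This assumes the two neighbors are the pixel to the right and the pixel below, and that one pixel step equals one depth unit; both are simplifications for illustration, and `estimate_normal` is a hypothetical name.

```python
import math


def estimate_normal(depth, x, y):
    """Estimate the surface normal at pixel (x, y) of a depth image.

    depth is a list of rows. The pixel, its right neighbor, and its lower
    neighbor form a triangle; the normal is the normalized cross product
    of the two triangle edges that start at the pixel.
    """
    dz_right = depth[y][x + 1] - depth[y][x]
    dz_down = depth[y + 1][x] - depth[y][x]
    # Edges are (1, 0, dz_right) and (0, 1, dz_down); their cross product is:
    nx, ny, nz = -dz_right, -dz_down, 1.0
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    return (nx / length, ny / length, nz / length)


flat = [[5, 5], [5, 5]]
print(estimate_normal(flat, 0, 0))  # a flat surface faces straight at the camera
```

Because each normal depends on only two depth differences, a single noisy pixel changes the result a lot, which is consistent with the noise problem described above.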

Better controls (Experimenting with other input controls)

Once I finally had a "pixel shader" I was happy with, I wanted better ways to quickly experiment. This led me to implement controls through a web interface, Xbox controller, MIDI keyboard, DJ MIDI controller, and my phone.

Blender (Using Blender scenes rendered as input)

Since I had some experience with Blender, I thought about creating a material in Blender that simply renders the scene using only depth values. I then take a whole recording and feed it to my Kinect software as if it were input from the Kinect.

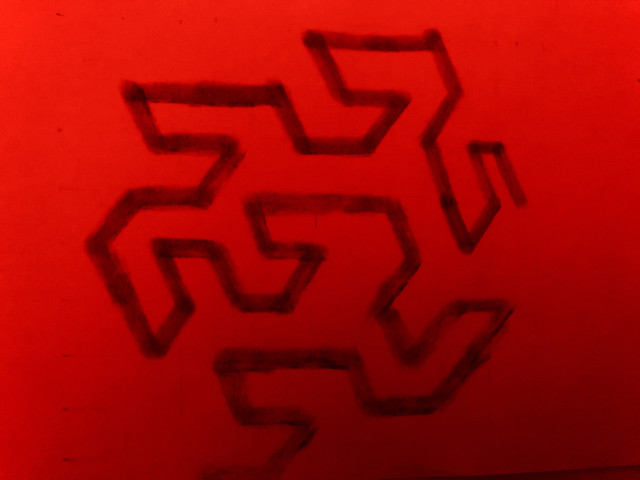

Raytracing (Integrating 3D models by ray-tracing with Embree)

I then started experimenting with rendering .obj meshes using Embree and blending the result into the raw depth stream generated by the Kinect. This allows me to add static meshes to a live scene and manipulate the object with an Xbox controller.
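One simple way to merge a rendered mesh into the sensor stream is a per-pixel depth test: keep whichever surface is closer to the camera. The Python sketch below assumes smaller values mean closer and uses `None` for rays that hit nothing. Both conventions, and the function name, are assumptions for illustration rather than what the Embree-based code actually does.

```python
def composite_depth(sensor, rendered, no_hit=None):
    """Merge a rendered depth buffer into a sensor depth frame.

    Both inputs are lists of rows with the same dimensions. For each pixel
    the nearer value wins; pixels where the ray hit nothing keep the
    sensor depth.
    """
    merged = []
    for sensor_row, rendered_row in zip(sensor, rendered):
        merged.append([
            s if (r is no_hit or s <= r) else r
            for s, r in zip(sensor_row, rendered_row)
        ])
    return merged


sensor = [[5, 5], [5, 5]]
rendered = [[None, 3], [7, None]]  # mesh is in front at one pixel, behind at another
merged = composite_depth(sensor, rendered)
```

Here only the top-right pixel takes the mesh depth, since that is the only place the mesh sits in front of the scene.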

Kinect v2 (Experiments with a Kinect for Xbox One)

Having spent this entire time trying to compensate for the high noise of the Kinect for Xbox 360, I sought out a used Kinect for Xbox One, having heard the quality was supposed to be much better. These are the results of those experiments.

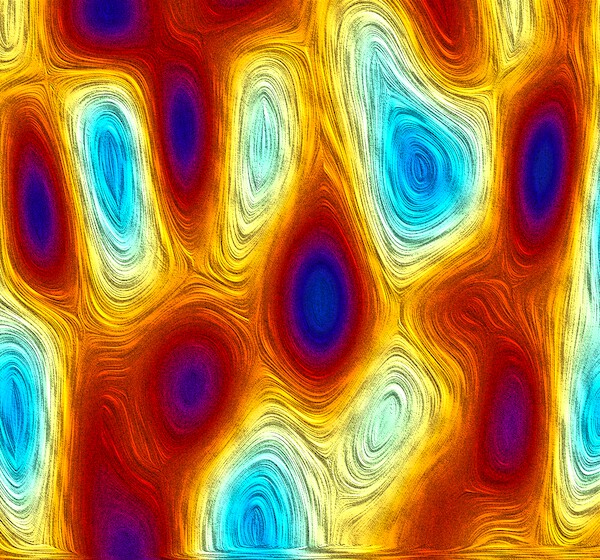

Abstract experiments (Using geometric blender output as source material)

I then moved on to generating abstract geometric animations in Blender and again exporting those as depth maps to be read by this software.

Weather-c

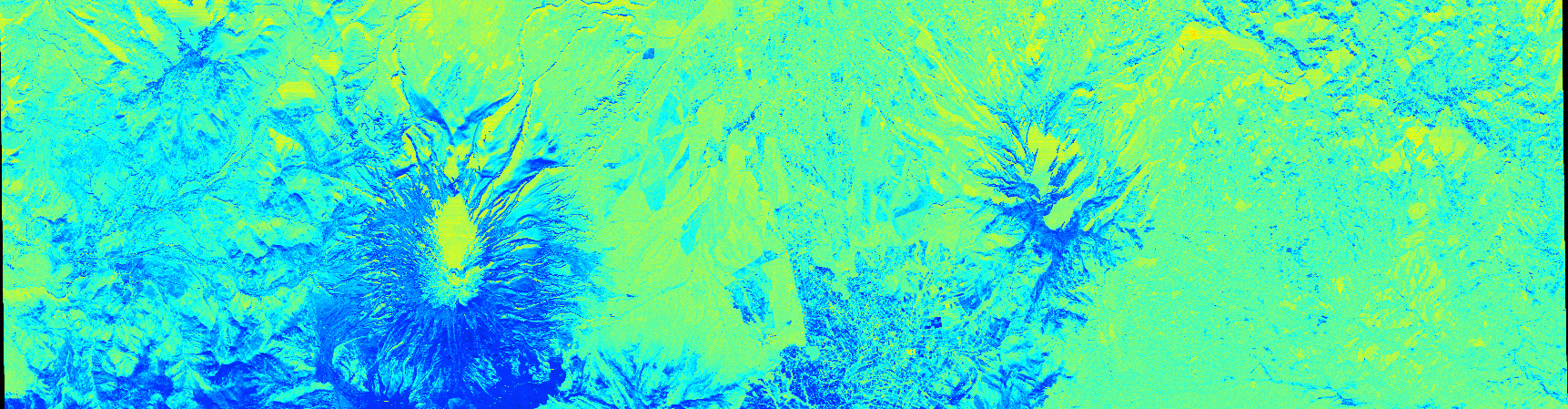

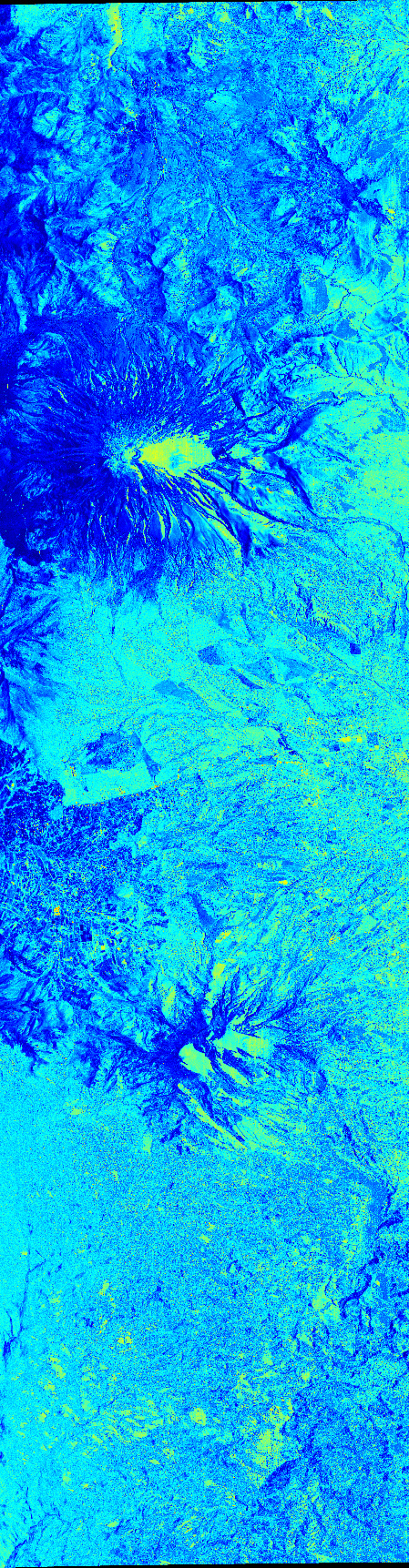

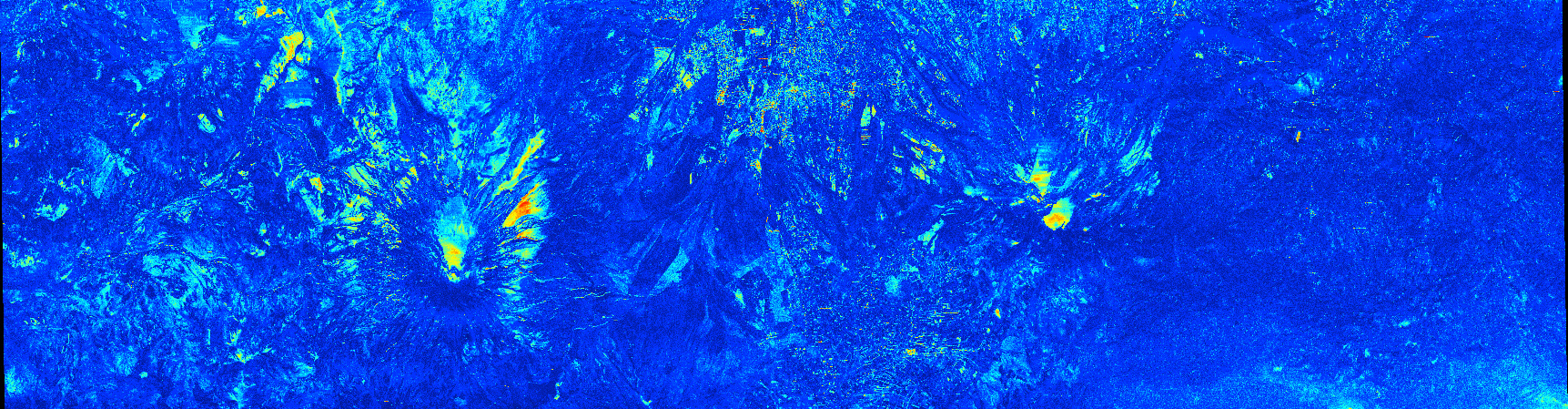

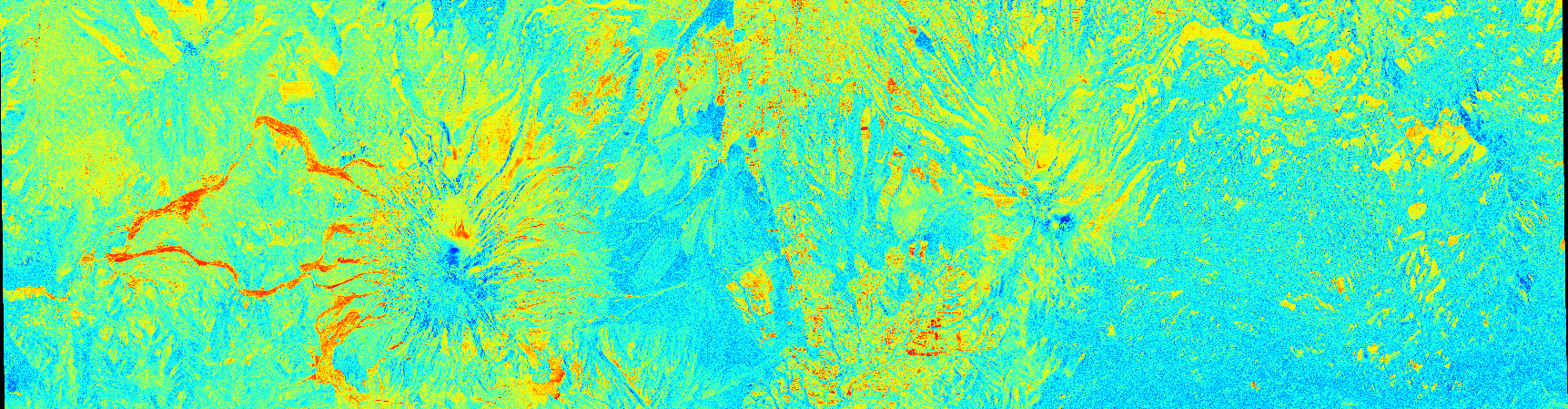

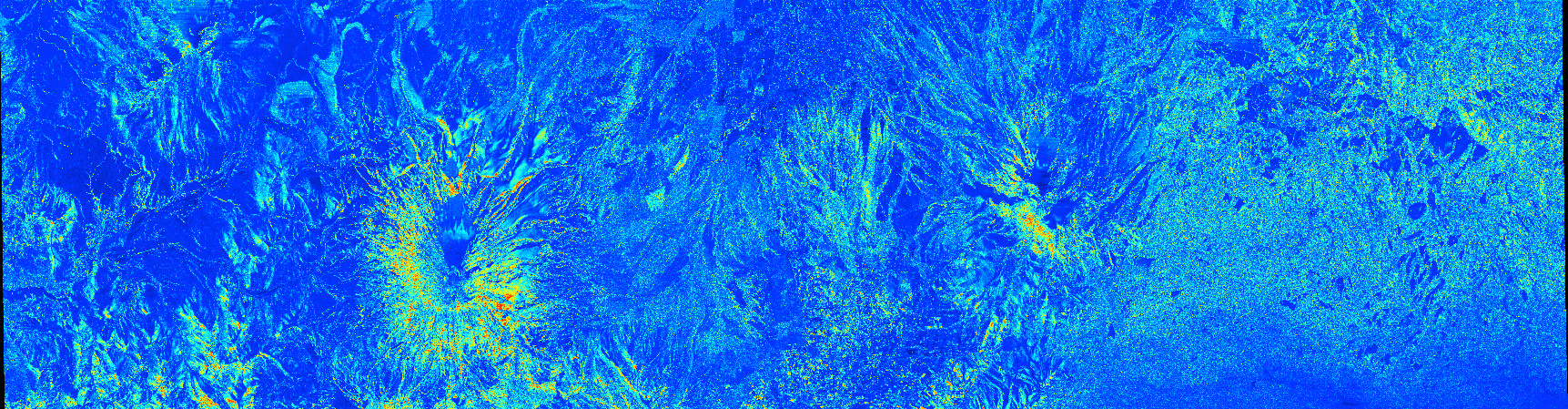

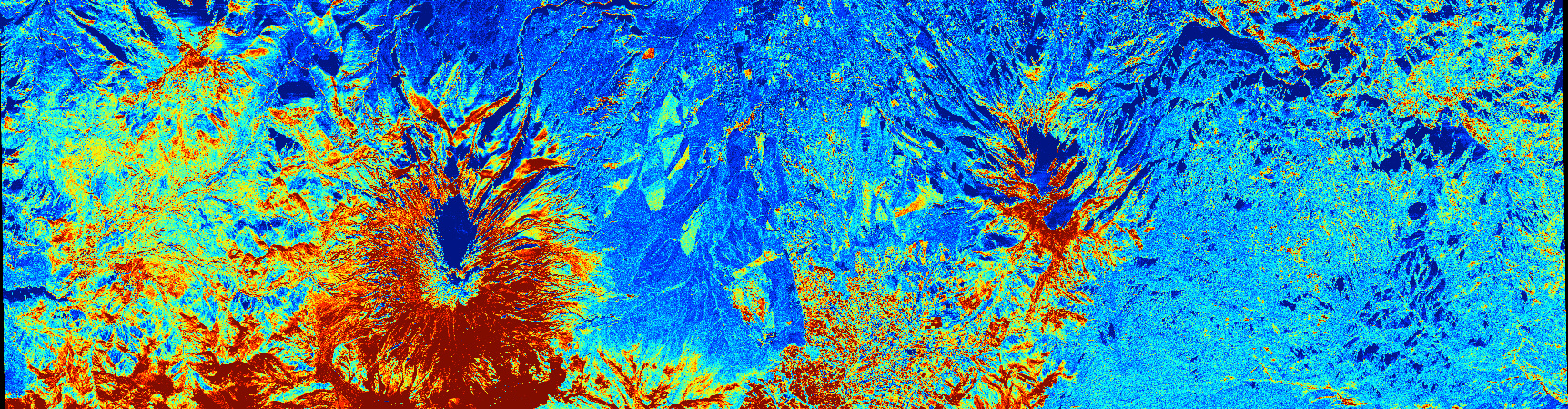

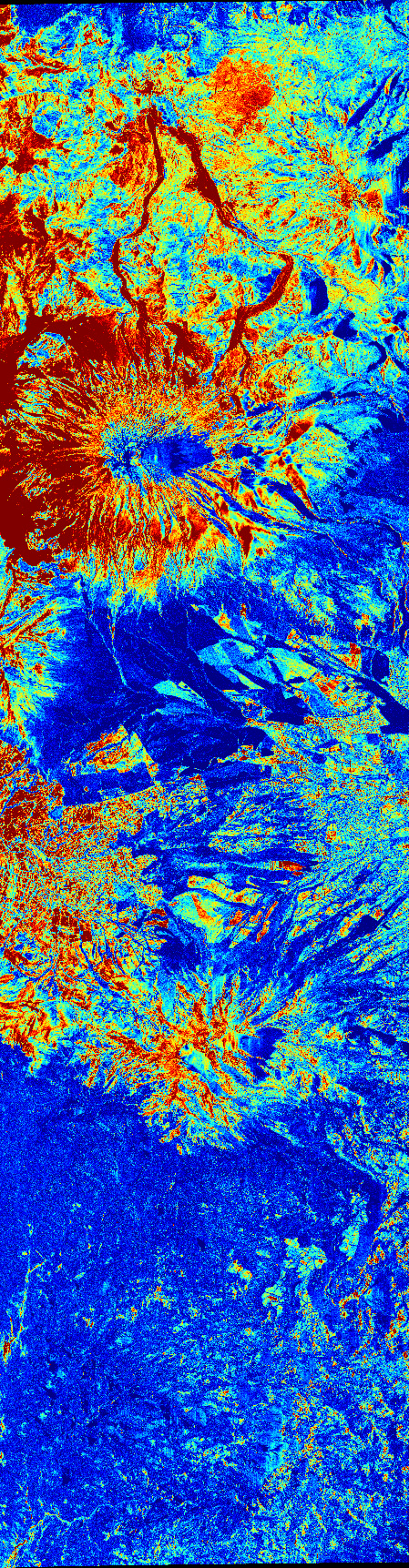

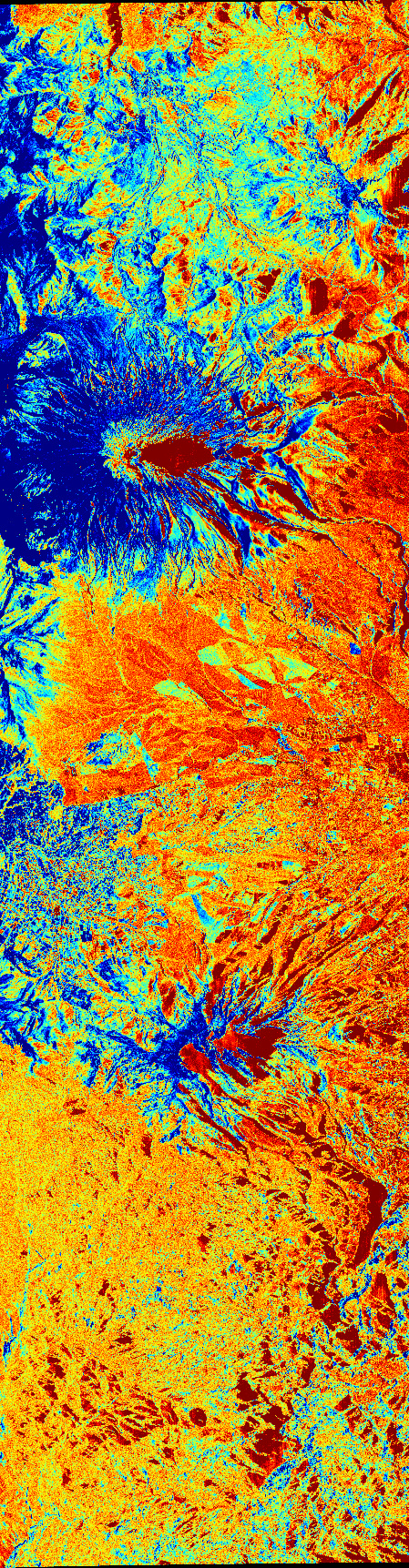

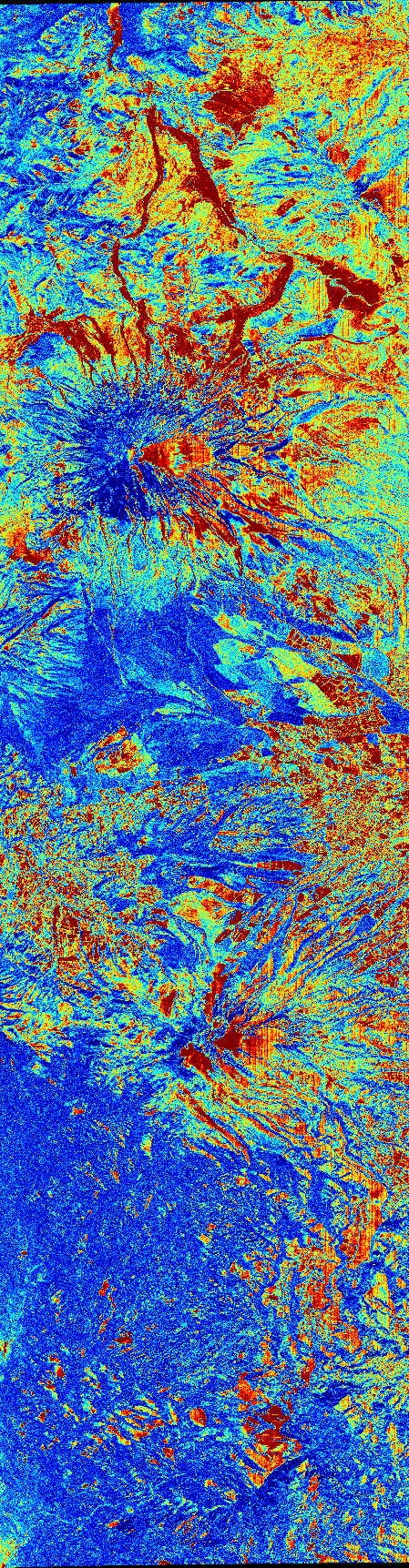

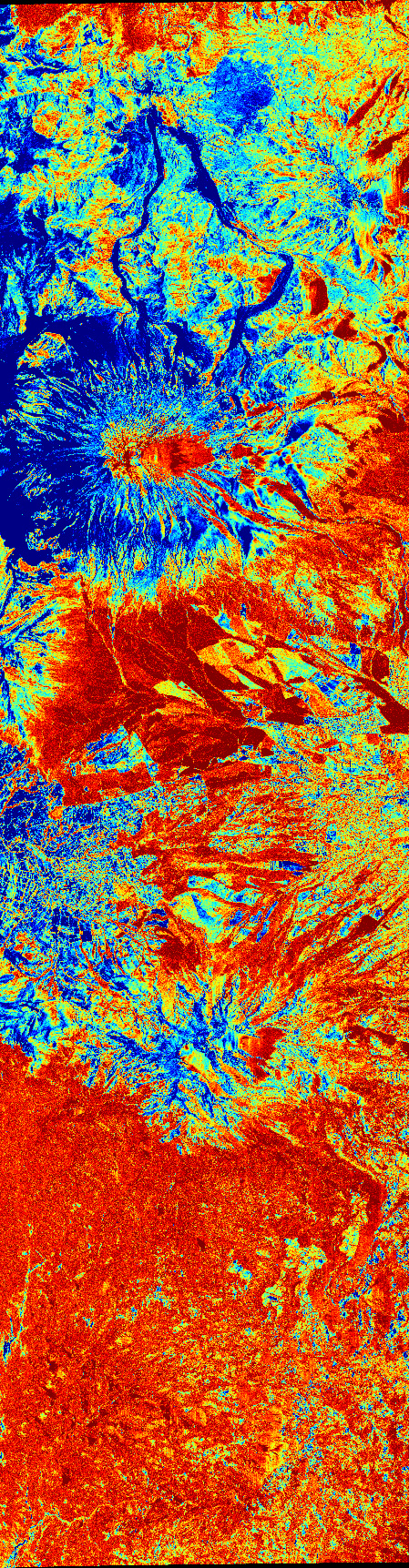

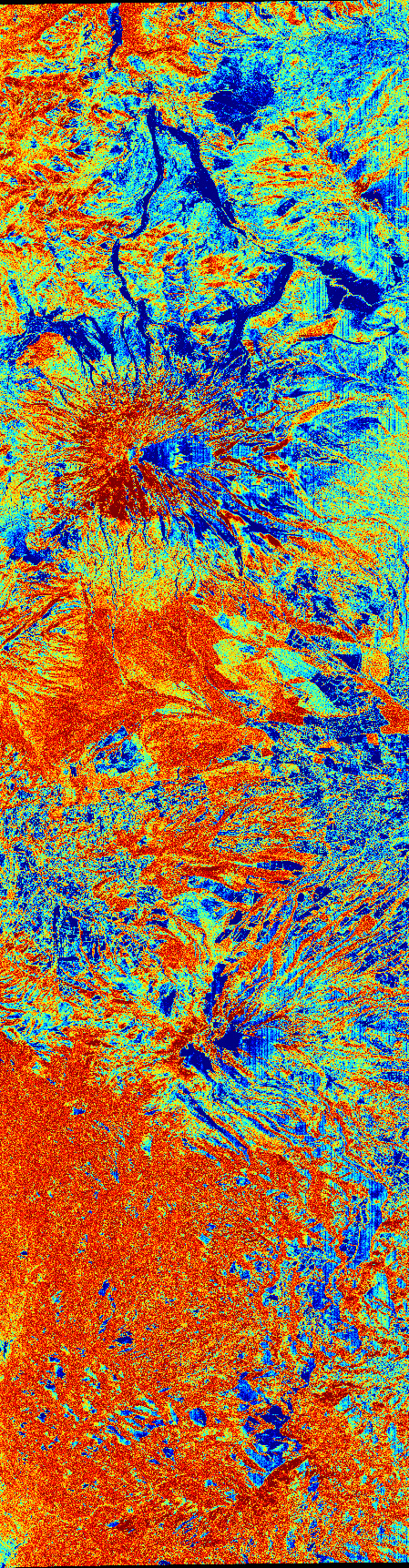

Some climate visualizations I made from a variety of climate forecasting and reanalysis datasets, including Europe's ERA5 and datasets from the US's NOAA. I started out by downloading any random files I could find and randomly plotting variables to see what would happen. This then grew into generating smooth timelapses of how these variables behave over time.


Most of these forecasts are published as .grib2 files. I first decoded them with pygrib, then moved on to ECMWF's proper ecCodes library. From there I extracted the raw 2D values as a time series and used ffmpeg to assign colors and then perform motion interpolation.
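To give an idea of the step between decoding and ffmpeg, here is a minimal Python sketch that normalizes one 2D field to 8-bit grayscale and packs it as a binary PGM image, a simple format that can be written frame by frame. The function name, the value range, and the sample values are assumptions for illustration; the GRIB decoding itself is left out.

```python
def field_to_pgm(values, vmin, vmax):
    """Encode a 2D field (list of rows) as a binary PGM (P5) grayscale image.

    Values are scaled linearly so vmin maps to 0 and vmax maps to 255;
    anything outside the range is clamped.
    """
    height, width = len(values), len(values[0])
    span = vmax - vmin
    pixels = bytearray()
    for row in values:
        for v in row:
            t = (v - vmin) / span if span else 0.0
            t = min(1.0, max(0.0, t))
            pixels.append(int(t * 255 + 0.5))  # round to the nearest level
    header = f"P5\n{width} {height}\n255\n".encode("ascii")
    return header + bytes(pixels)


# Hypothetical 2x2 temperature field in kelvin.
frame = field_to_pgm([[270.0, 280.0], [290.0, 300.0]], vmin=270.0, vmax=300.0)
```

Using one fixed `vmin`/`vmax` for the whole time series keeps brightness comparable between frames, which matters once the frames are turned into a timelapse.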

Links to data sources

- ERA5 hourly climate reanalysis - historical hourly climate reanalysis (medium resolution) [world]

- Arome (Meteo-France) - French weather service's climate forecasting (high res) [France and Europe]

- Arpege (Meteo-France) - French weather service's climate forecasting (medium res) [world]

- NOAA's GFS - global forecast system from NOAA [world]

- NOAA's HRRR - high resolution rapid refresh climate forecasting (has subhourly too) [US only]

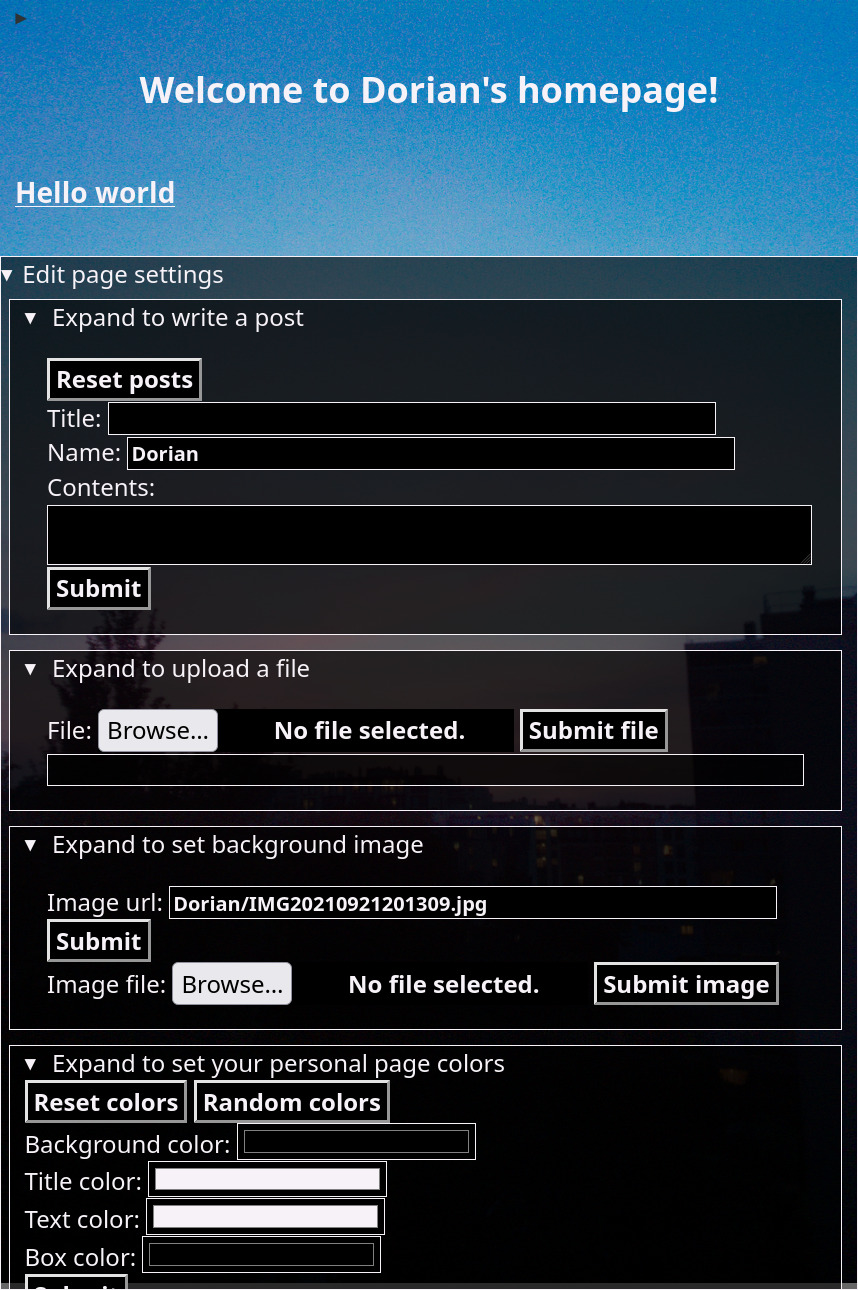

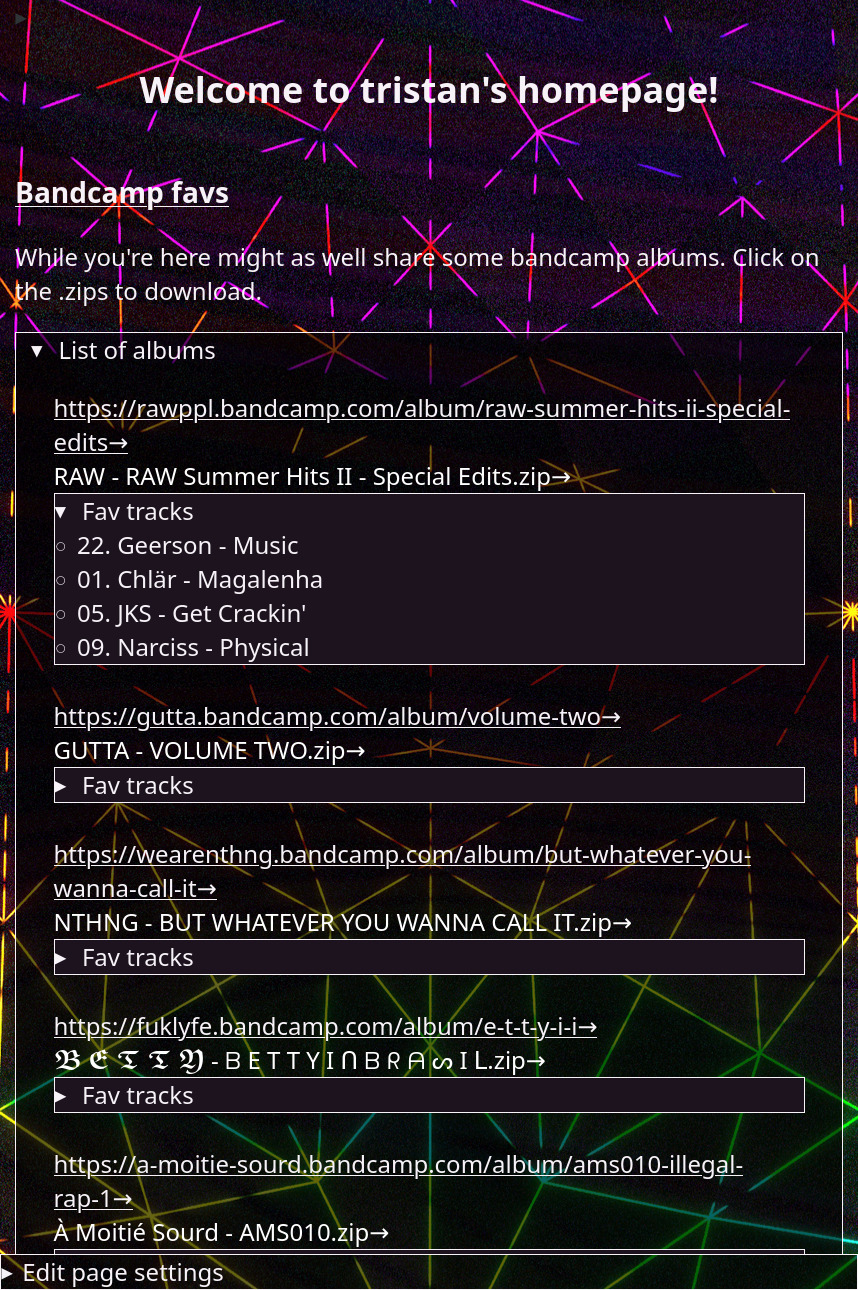

A handmade static site generator and server for a social media site, written using only Python 3's standard library. GitHub code is right here.


The user can perform many tasks from the site, including creating a profile, customizing the background image and colors of their profile, uploading files or images to their profile, and creating posts under their profile.

Kinect, AR, and ProjectM

Early projects related to the Kinect 360 and scanning in a mesh of my living room, as well as some projectM visualizations.

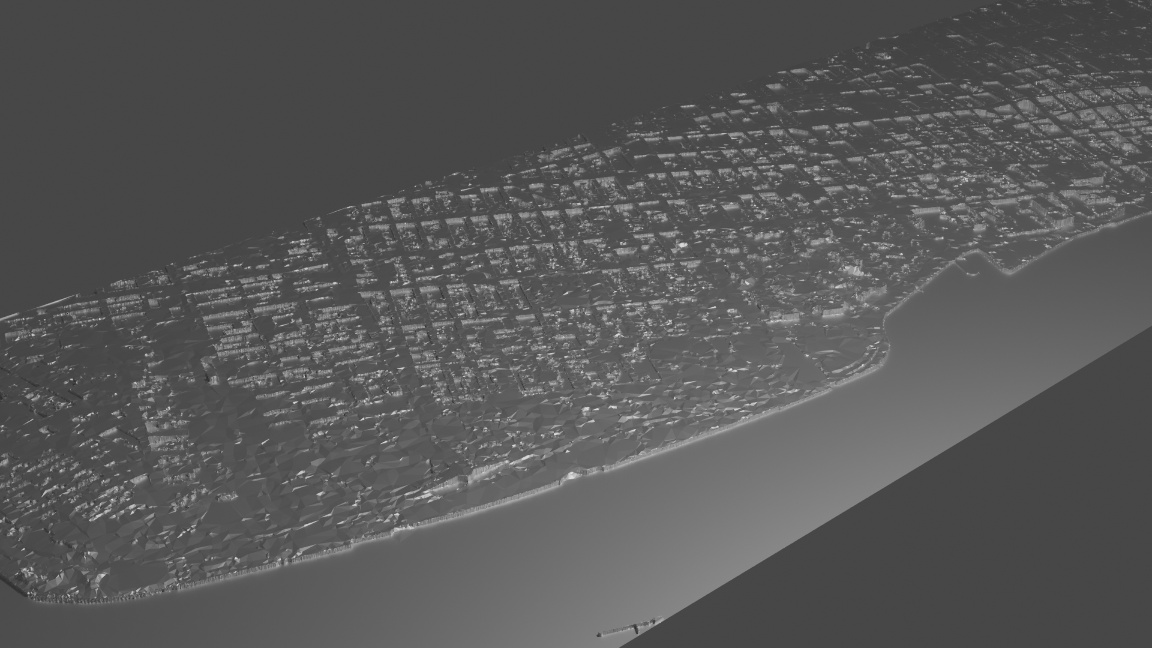

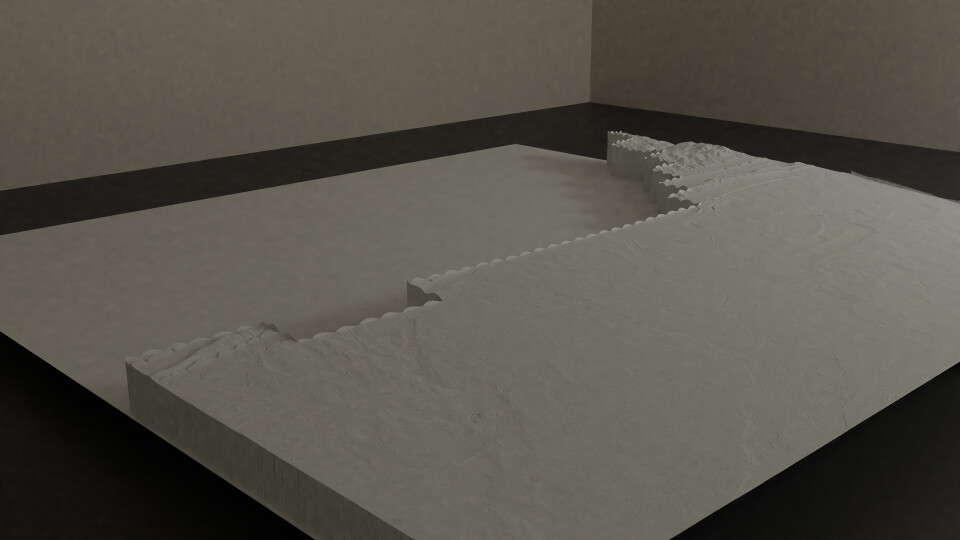

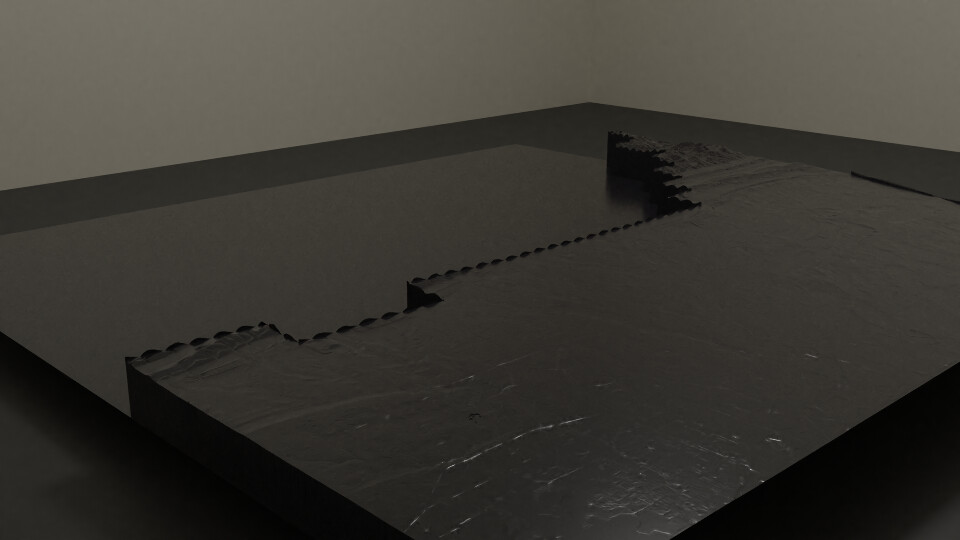

Kinect (Room scans with Kinect for xbox 360)

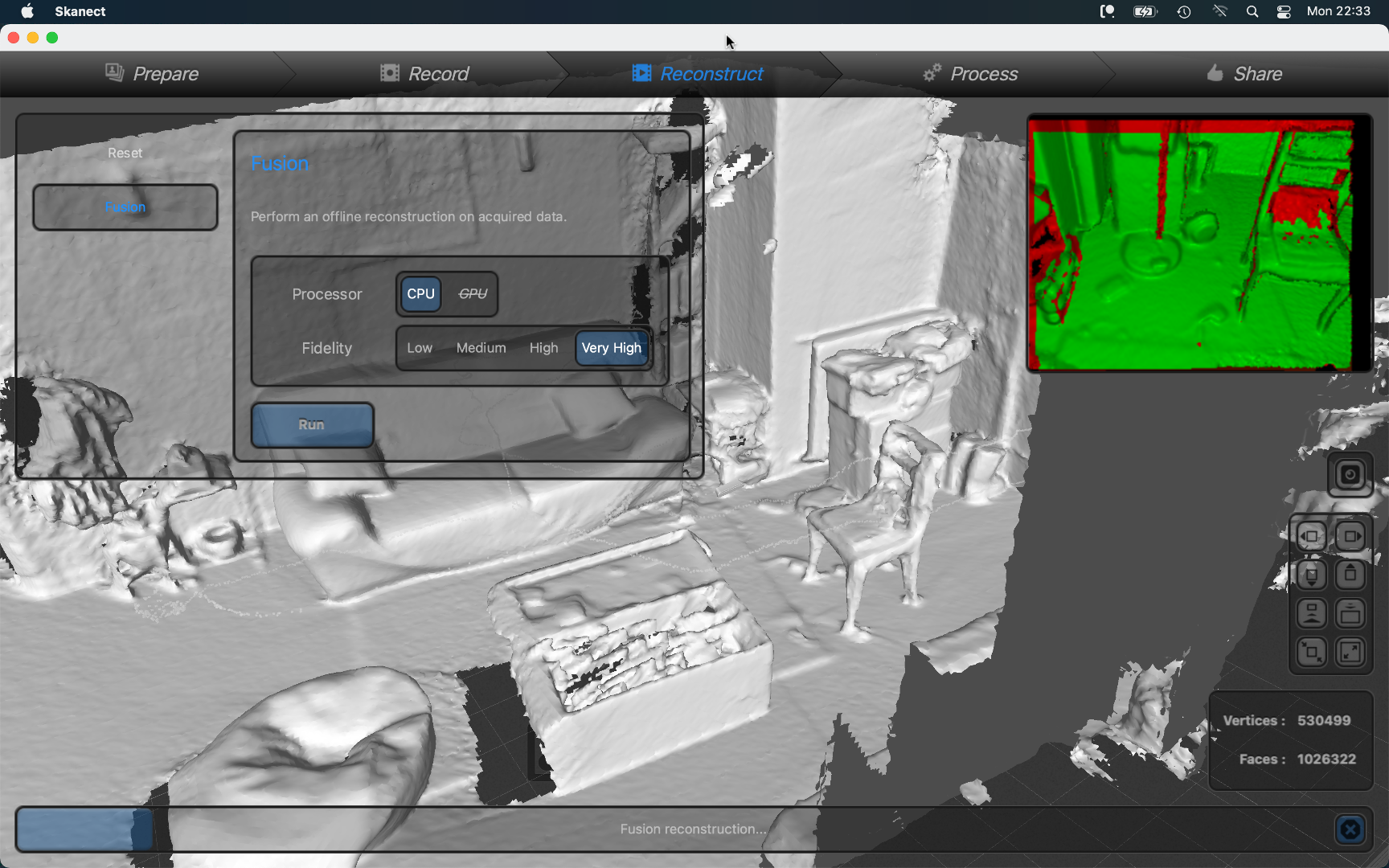

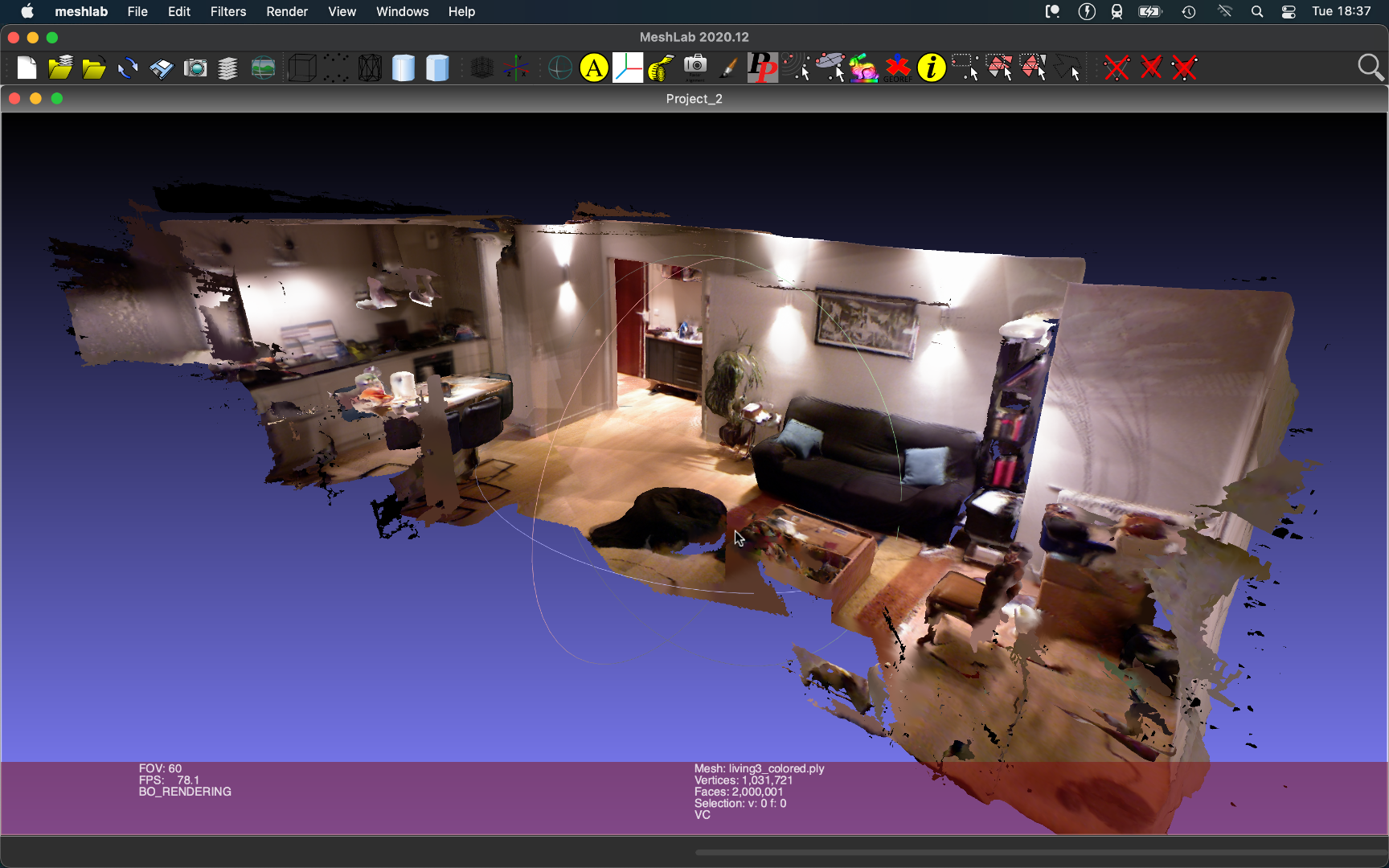

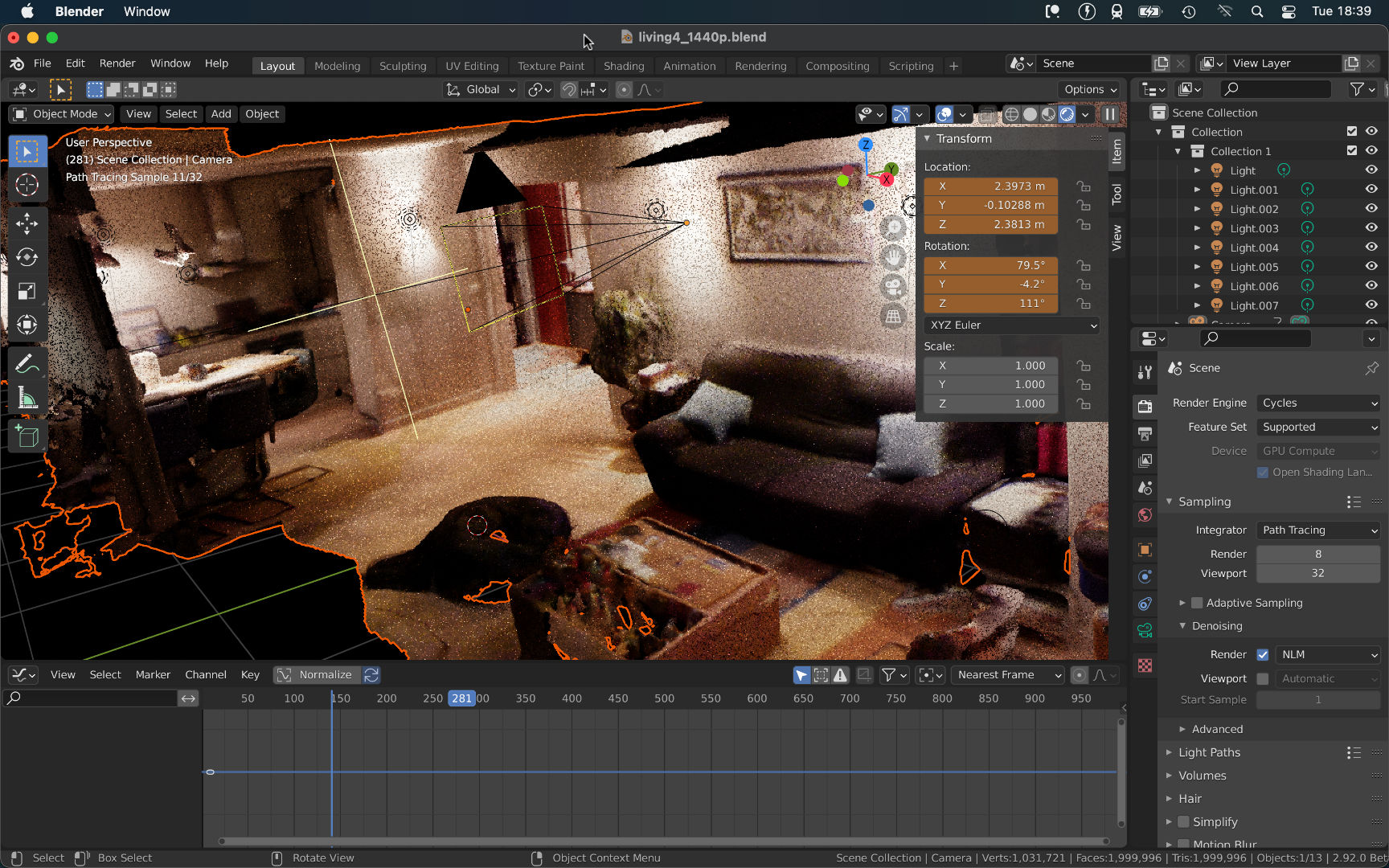

Found an old Kinect for the Xbox 360 my family used to have. Since I've been into Lidar recently, I looked up what all I could do with it. Found a piece of software called Skanect, made for generating meshes from real-world scenes using the Kinect's depth sensor and even coloring them using its 480p color camera. So with mixed results and a lot of patience, I was able to make scans of my living room and my bedroom.

Rendered results

Visualizations (Using ProjectM to generate visuals for whatever audio is playing)

This goes back to this winter. projectM is an open source project that lets you run old MilkDrop shaders (or any HLSL or GLSL shader) on modern hardware. I ended up modifying it here so I could capture raw frames instead of having to screen record the window, which was expensive in terms of computation and memory, hurt visual quality, and was harder to use. So I messed around in it for a while and decided to produce some videos.

pt. 1

-

Which I then turned into

-

and

-

which became

Rendered results

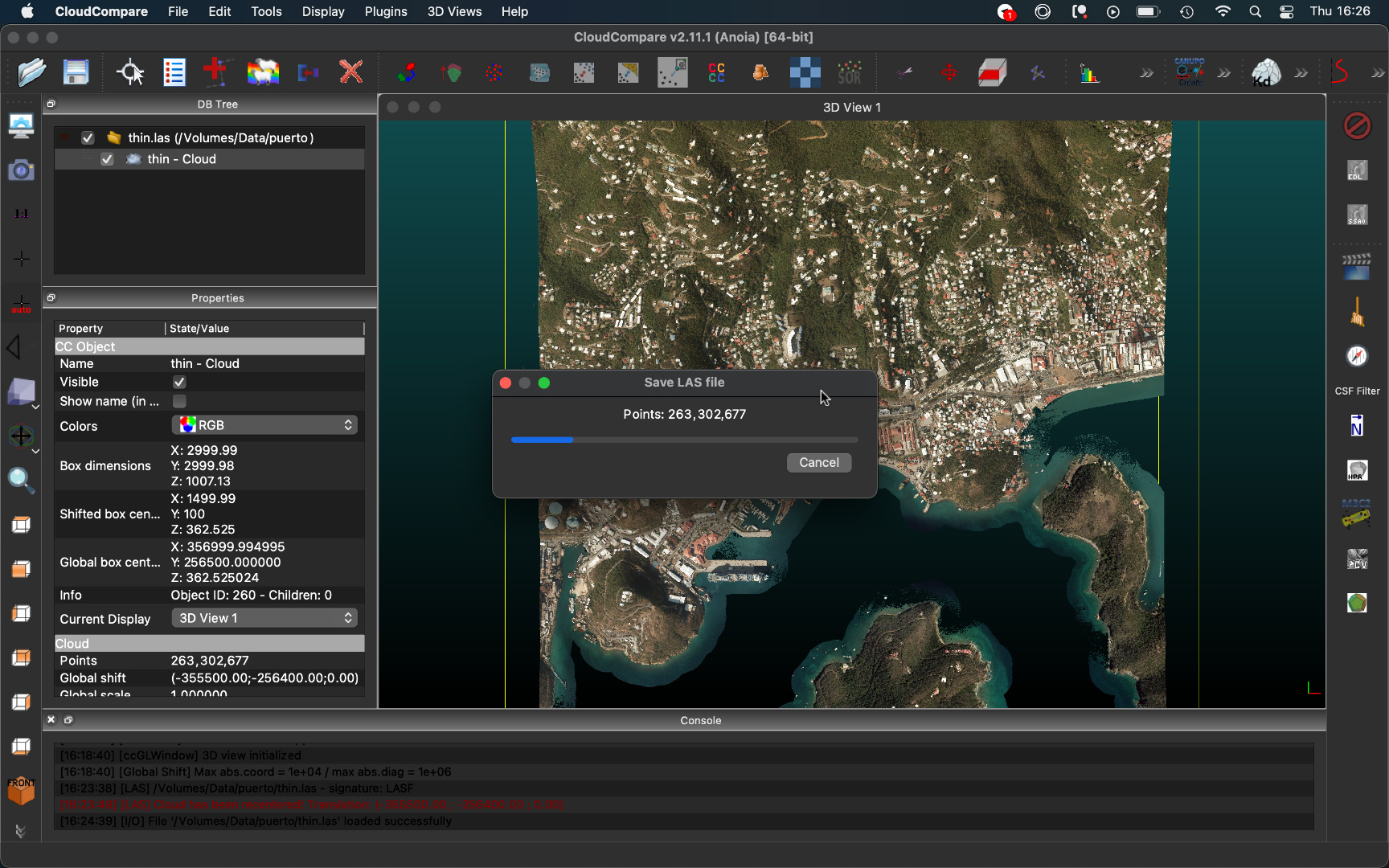

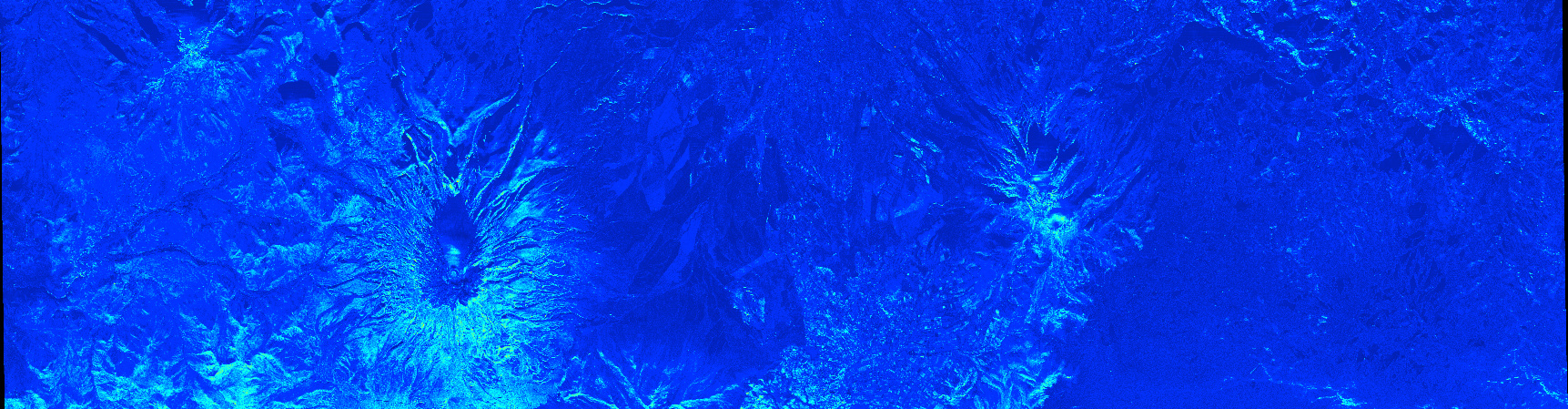

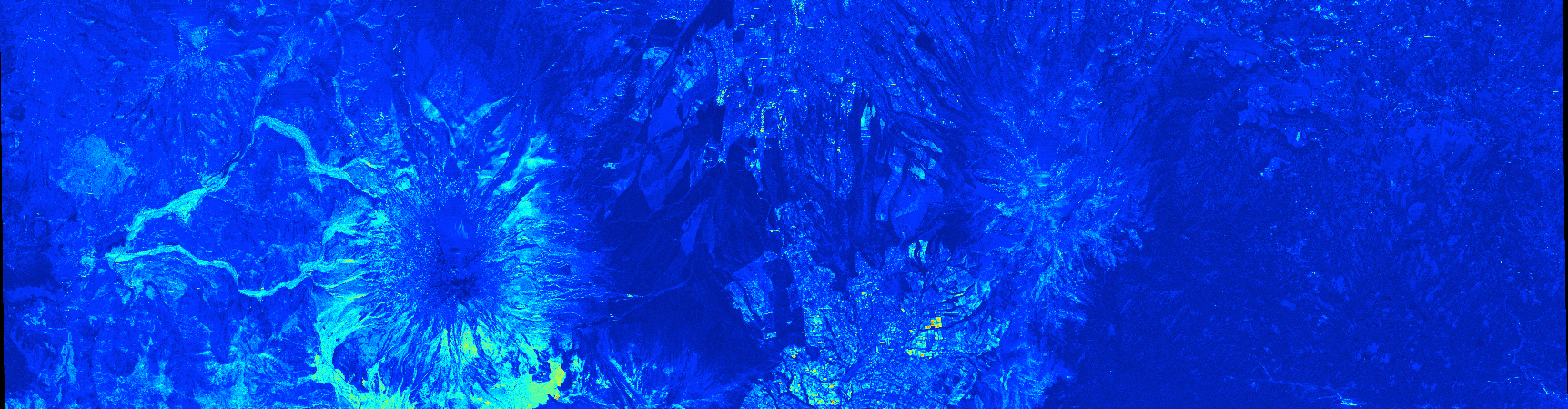

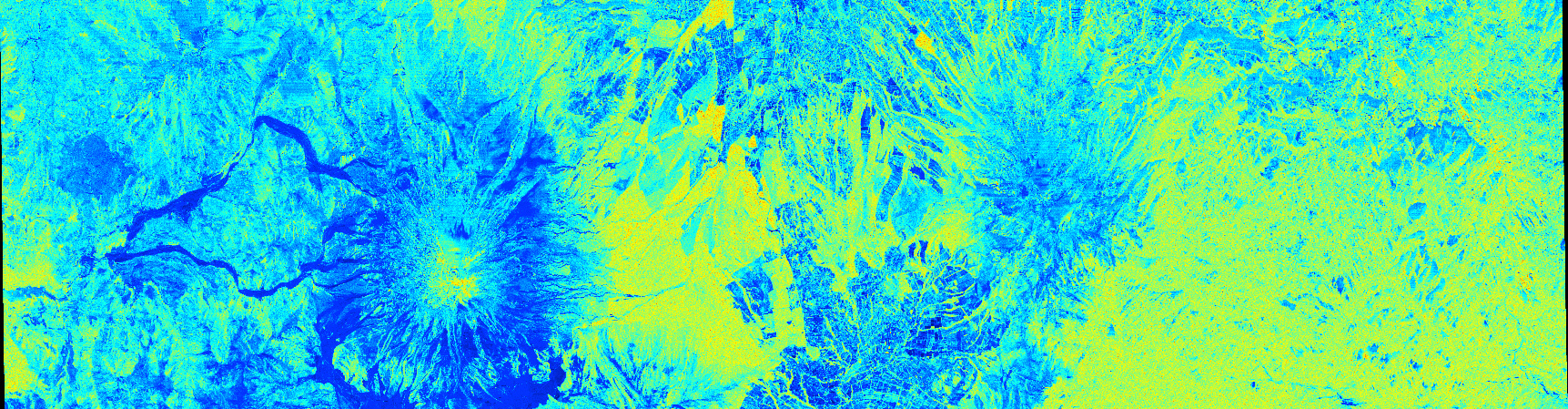

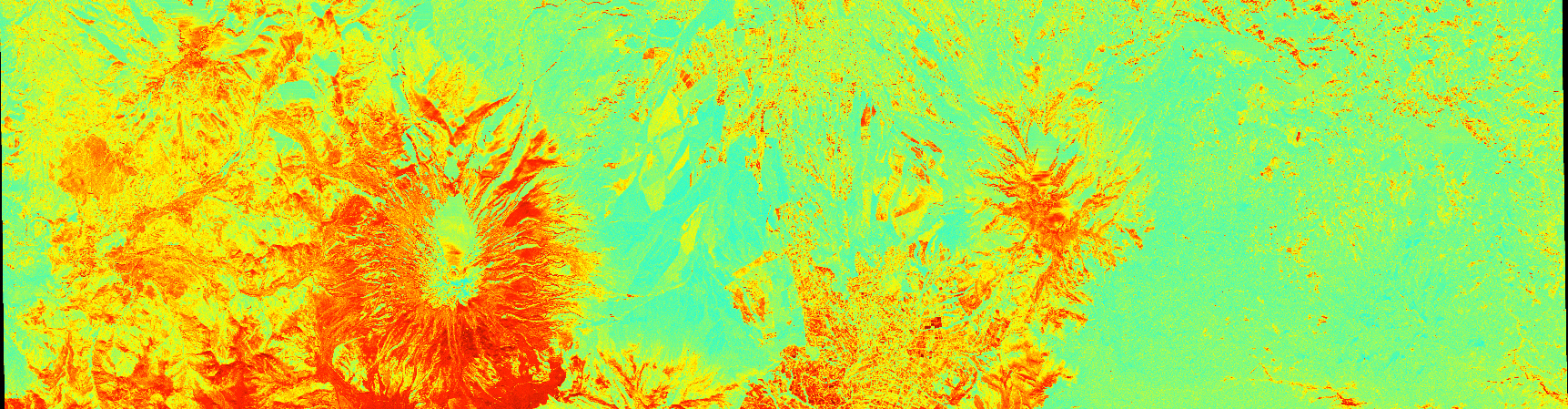

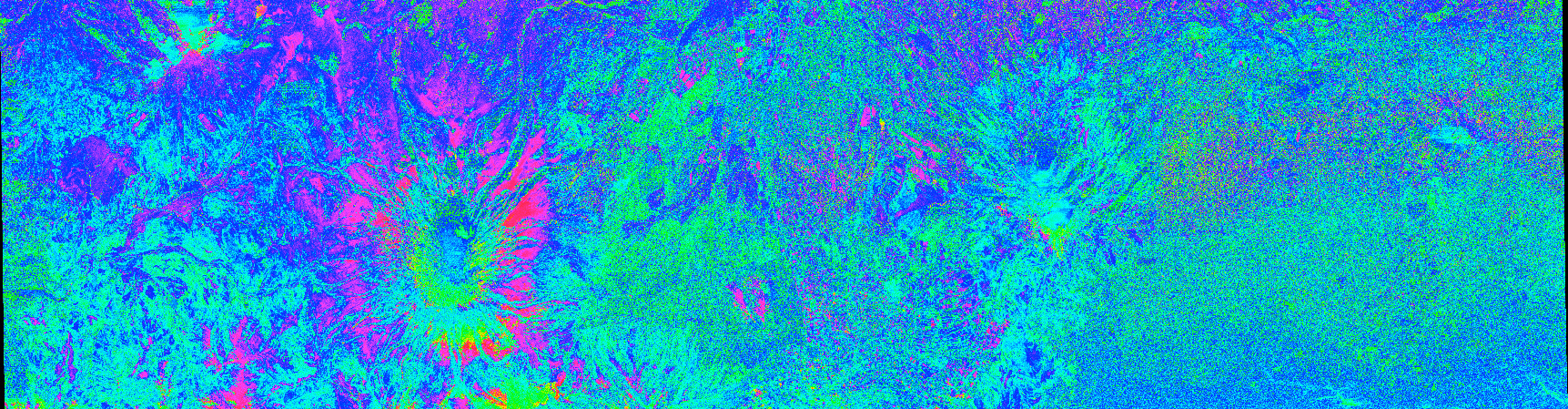

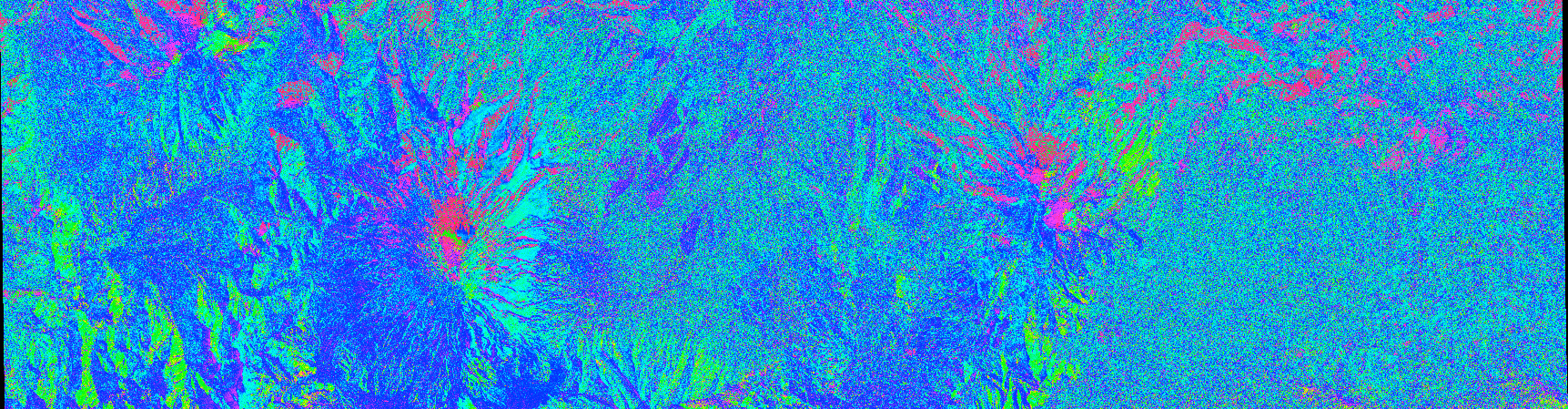

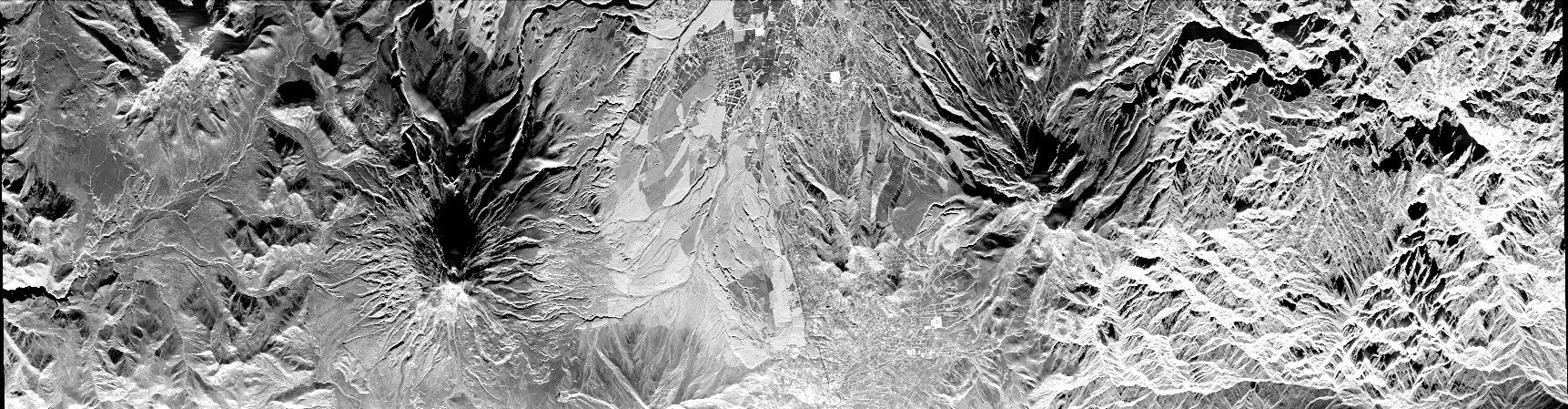

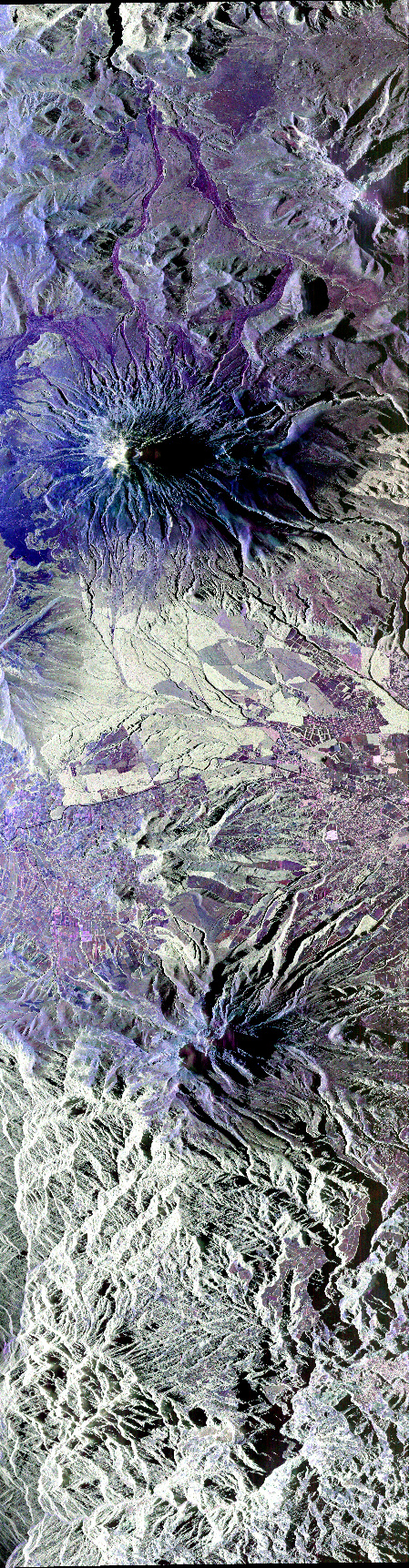

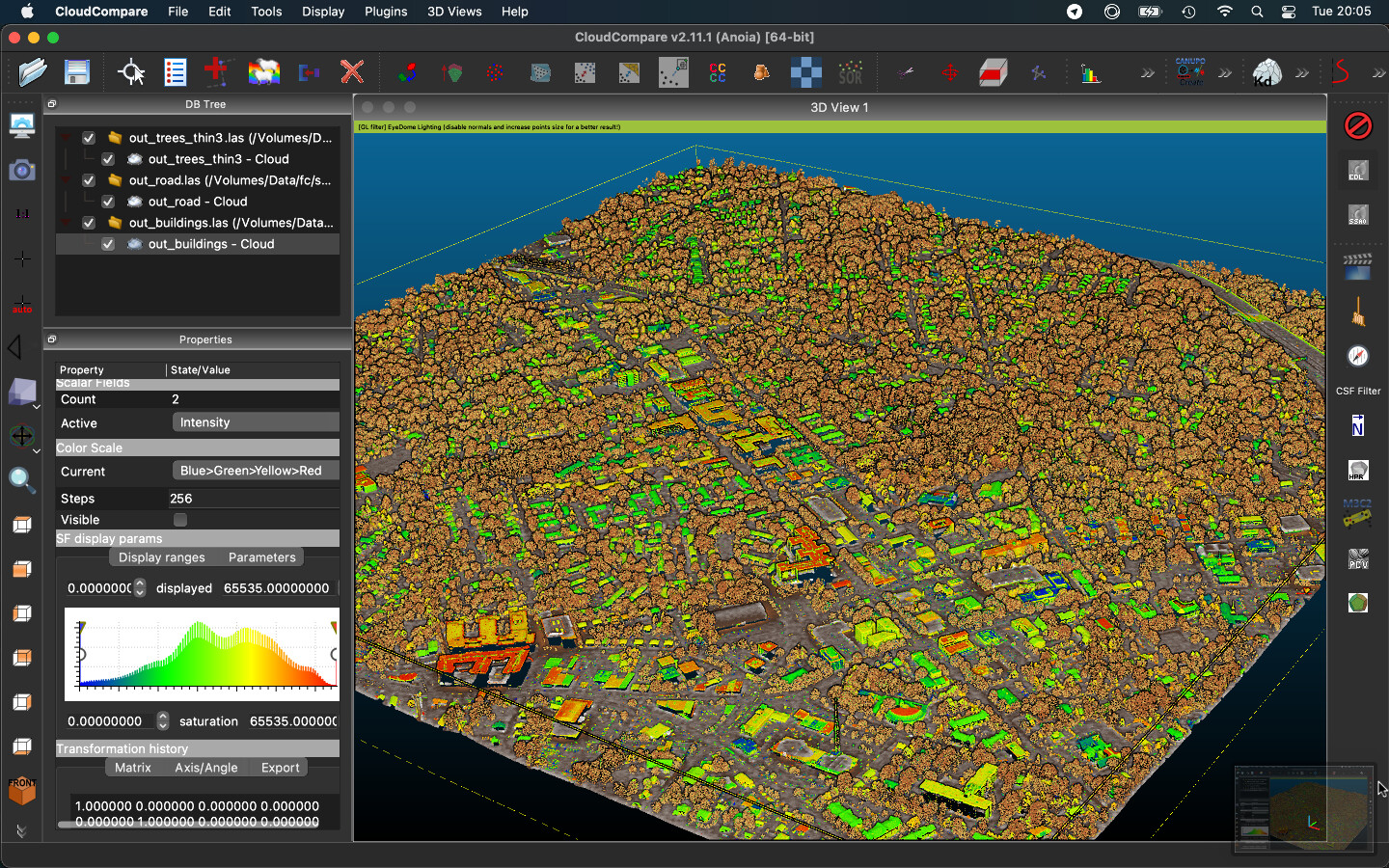

Lidar, DEM, and SAR

Messed around with the data that was available on USGS, Lidar Explorer, ESA's Sentinel, and OpenTopography.


I'm slowly learning how to use all of the GeoRef'd data that's freely available online.

My biggest achievement these past few days has been to be able to apply orthoimagery to point clouds and most recently DEMs too!

That was the whole point of BlenderRenderTerrain so I guess that makes that whole project almost obsolete.

These subprojects are the product of me messing around with this type of data.

For the most part, these are in chronological order.

Links to data sources

- USGS's EarthExplorer - high resolution orthoimagery (up to 10cm resolution). [mainly US]

- 3DEP LidarExplorer - point clouds (up to 7pts/m^2) and digital elevation models (1m res). [US only]

- ESA's Sentinel - near real time global coverage in 8 different wavelengths (IR to visible to UV). Tons of derivative data provided too. [world]

- IGN - French mapping agency. Provides high res ortho (up to 10cm), 5m res DEM, vector maps, and much more. [France]

- JAXA's GeoPortal - Japanese space agency. Covers everything from magnetometers to radar to IR to SAR. [mostly Asia-Pacific but world too]

- USGS's Annex of PDS - USGS's collaboration with NASA for everything related to mapping data of other celestial bodies. [solar system except Earth]

- UAVSAR - part of NASA. Uses airborne scanners to get polarimetric SAR. It's stunning what information they can extract from that data. Seriously, check it out, the tech is amazing. [select places in the world]

Sub-projects

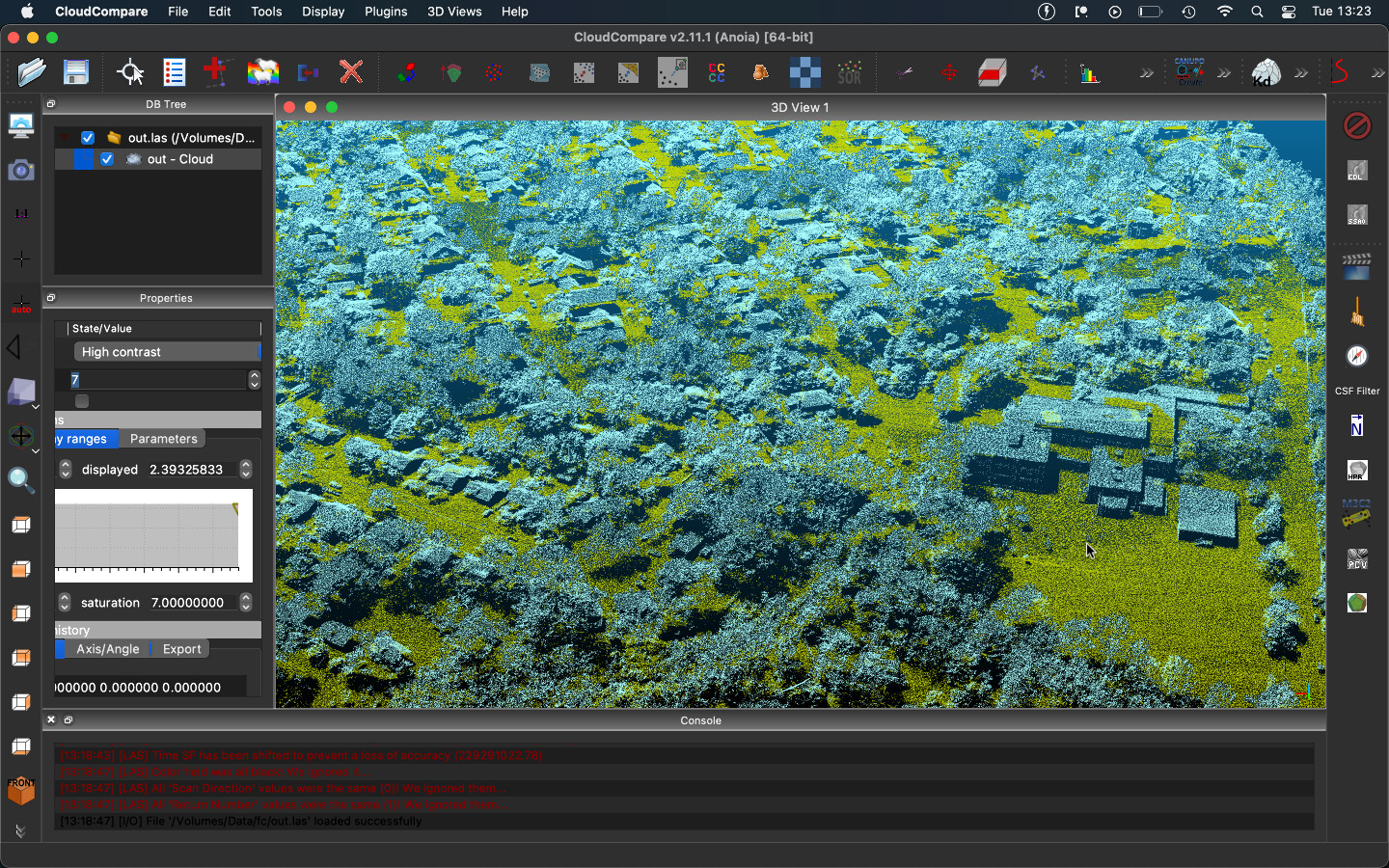

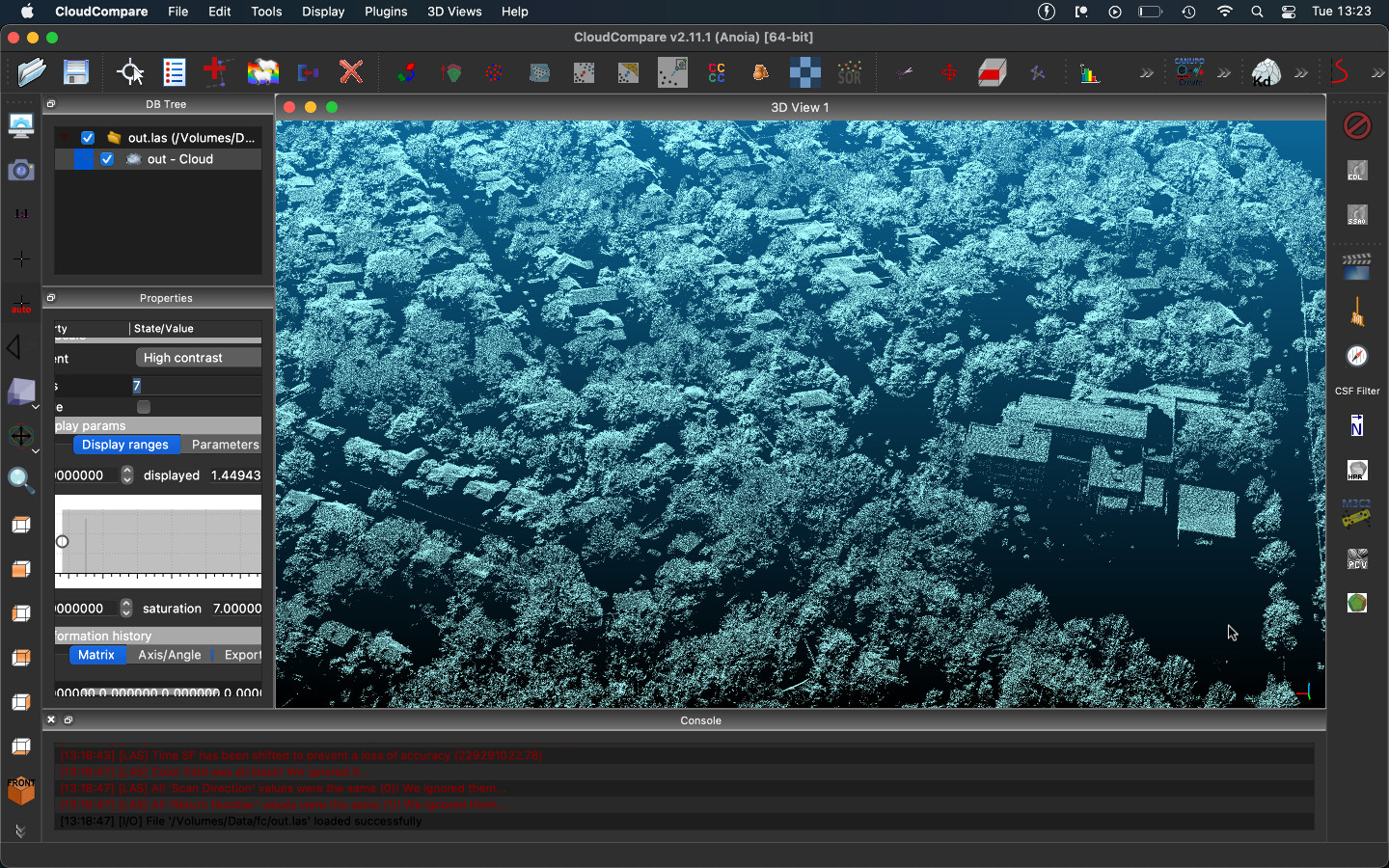

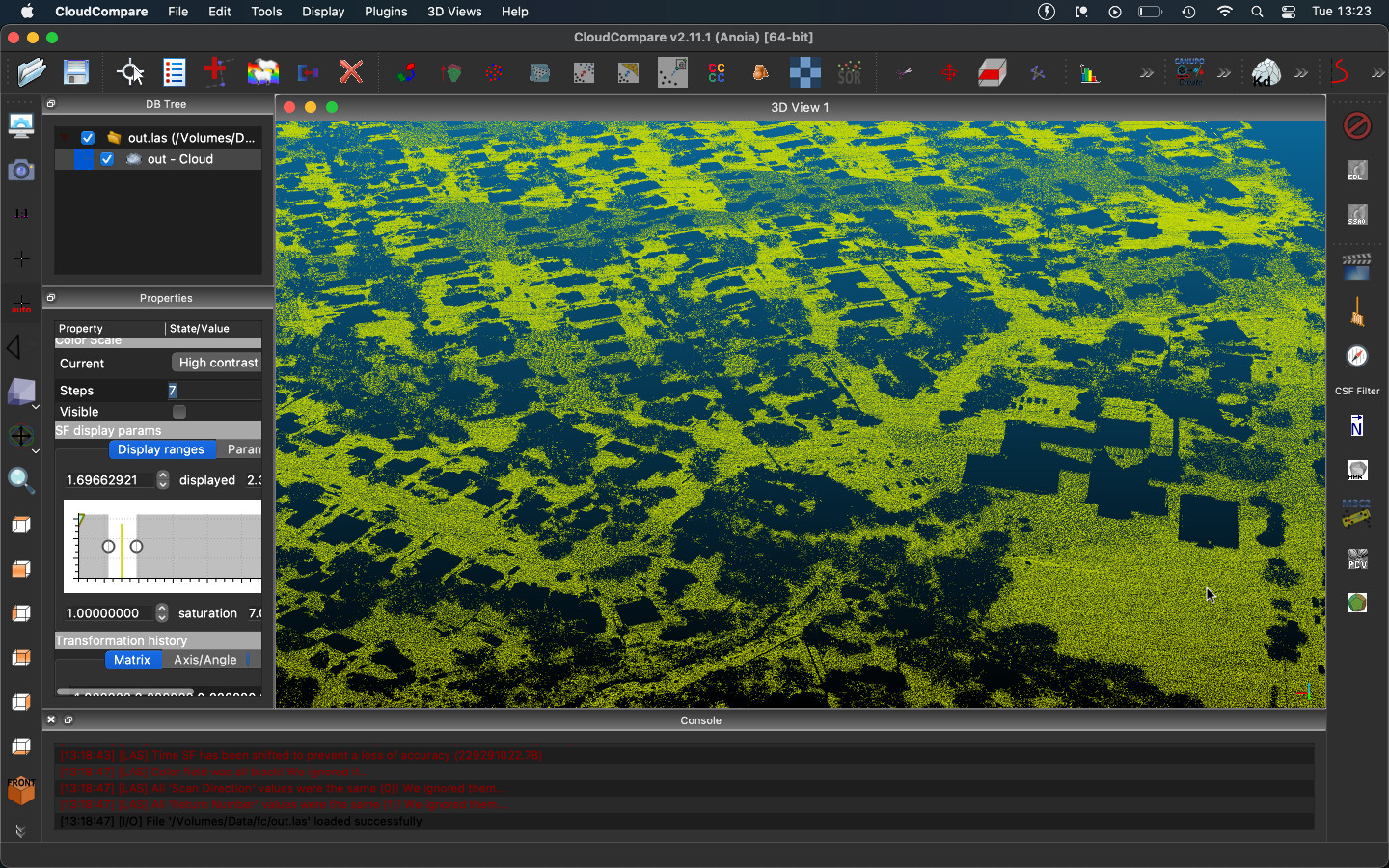

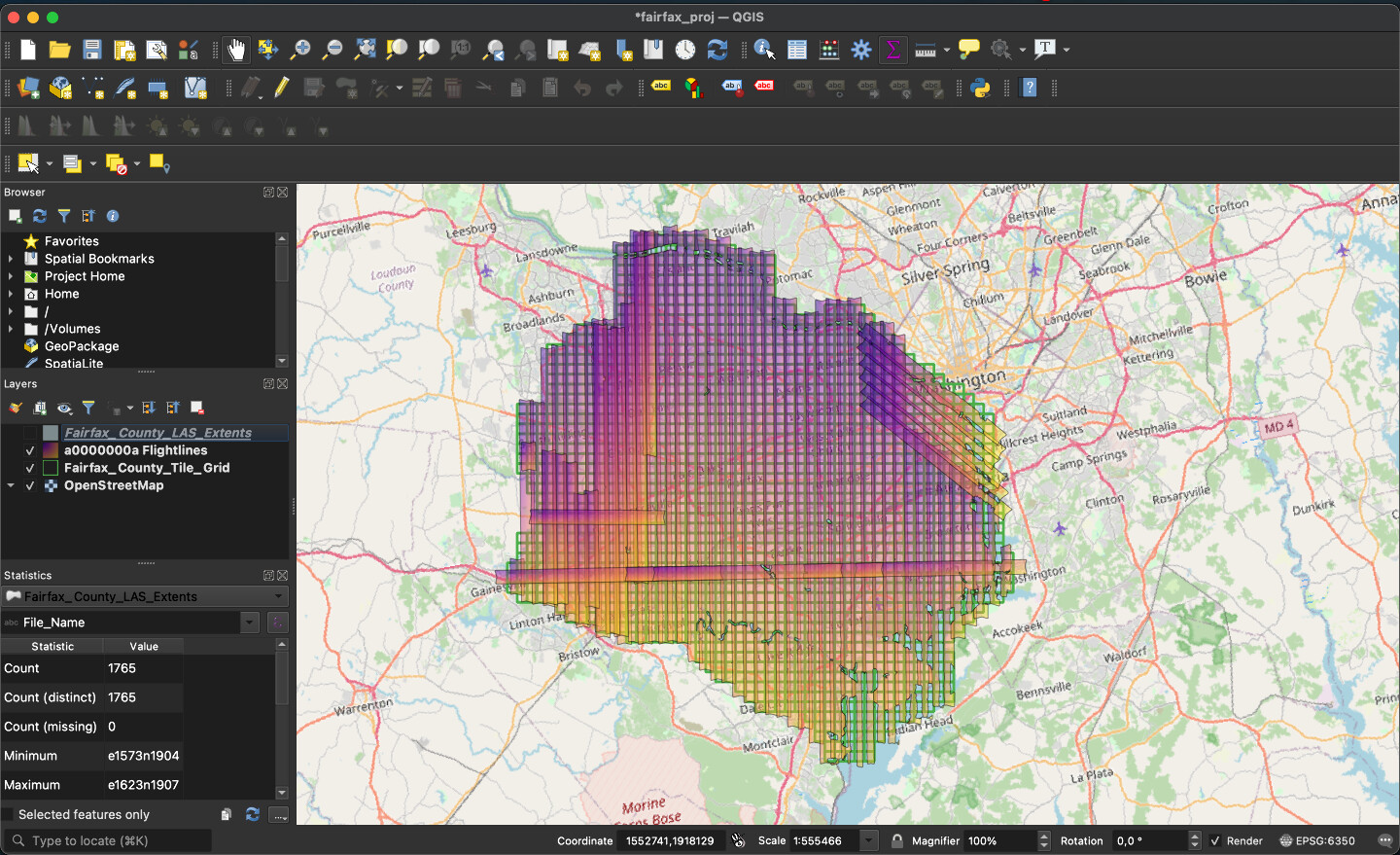

fc

- Raw point cloud (fc.bin): download CloudCompare and drag and drop this .bin into the window to open it (1.1G)


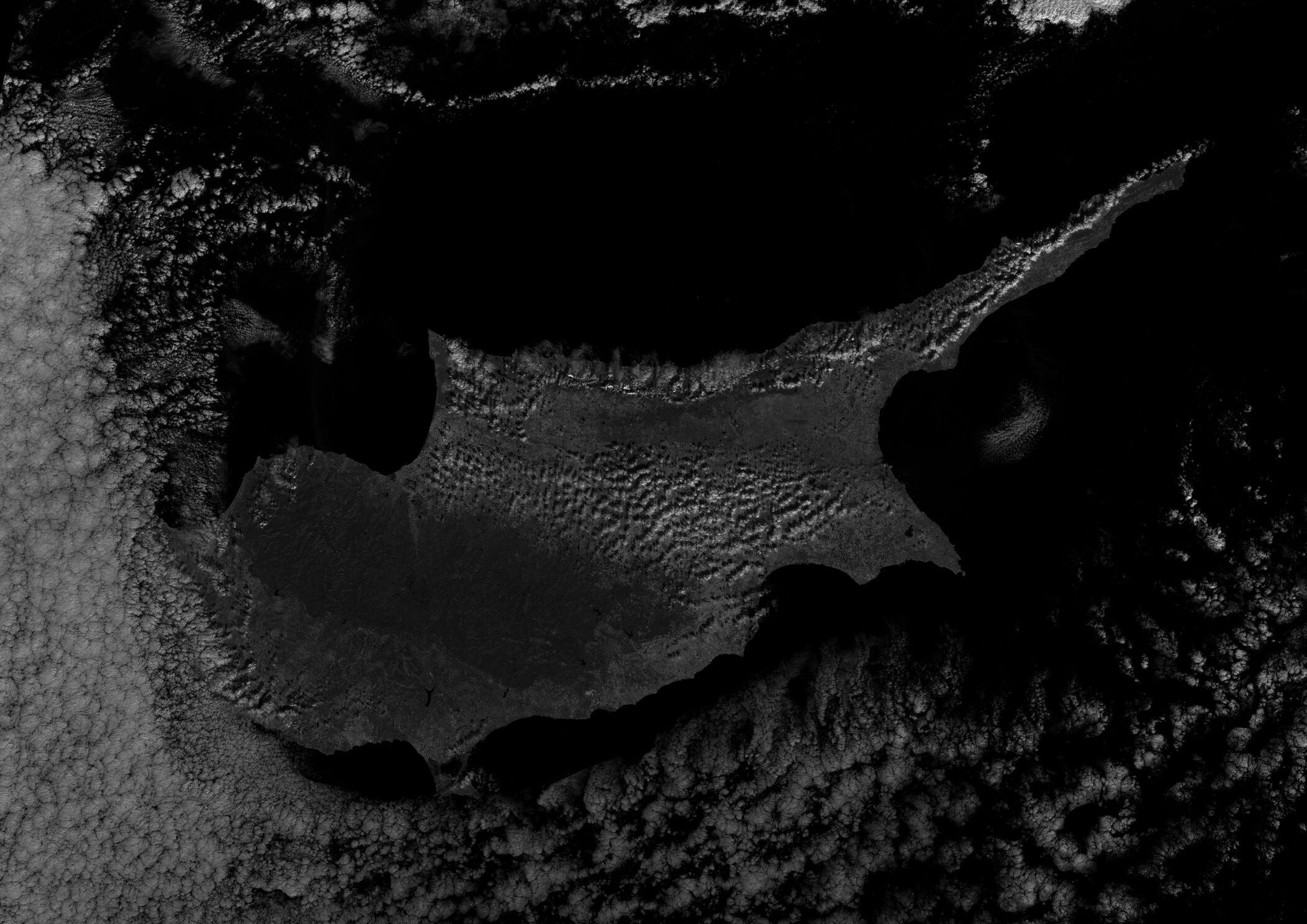

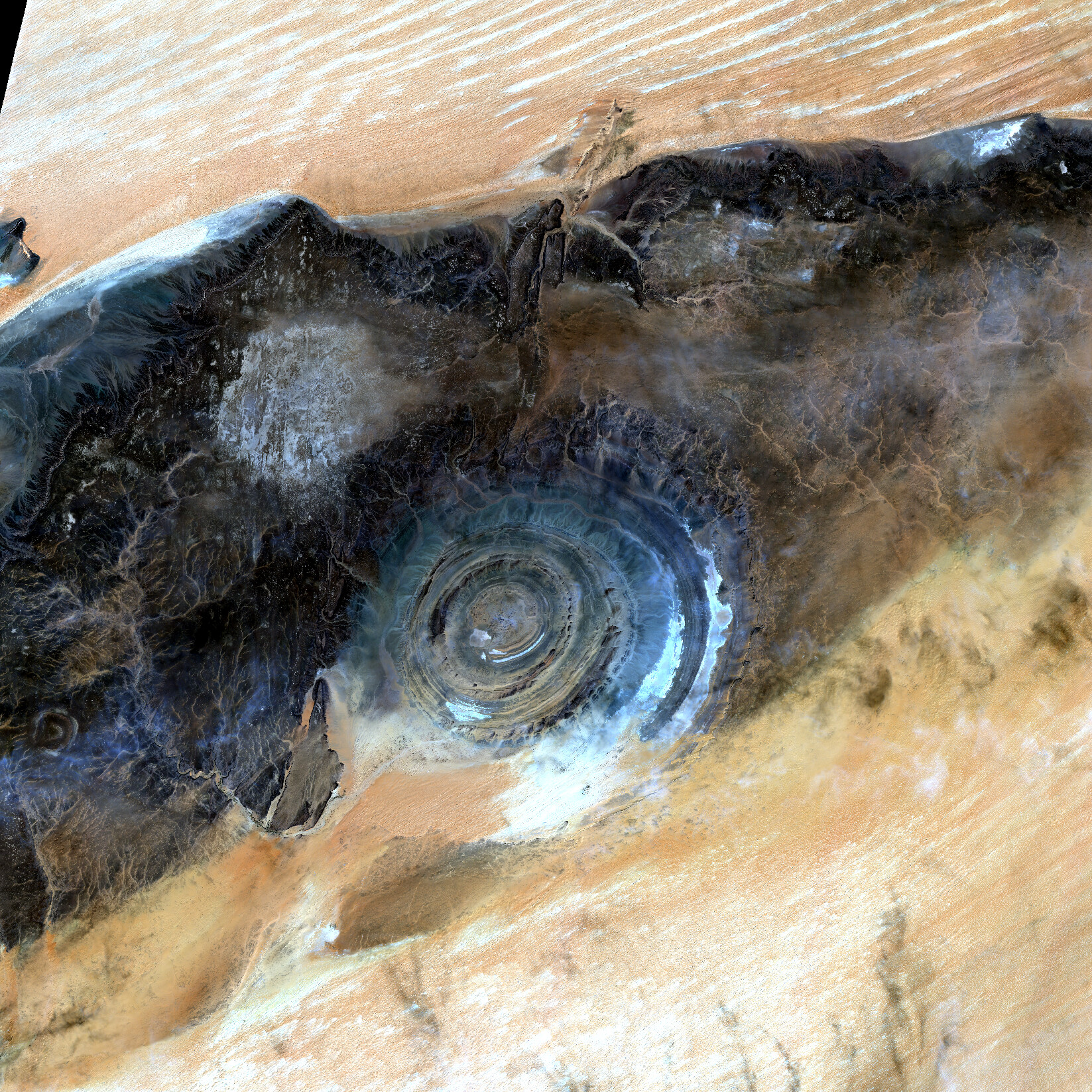

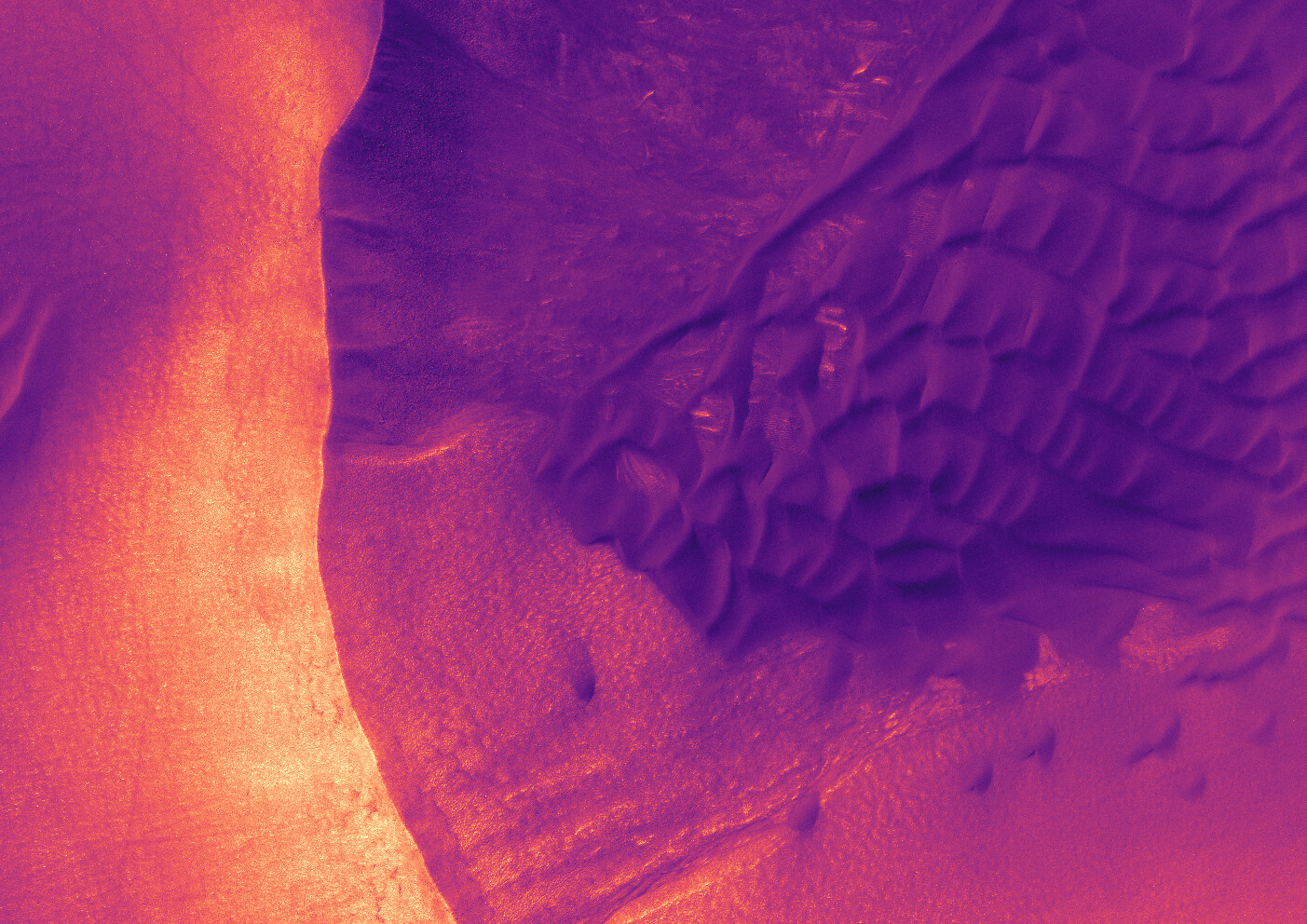

Various imagery gathered from space-borne satellites, all in the optical range. Most Earth shots are from the ESA (Sentinel 2A and 2B satellites).

New Mexico (this was while I was first discovering how to apply georef'd orthoimagery to point clouds)

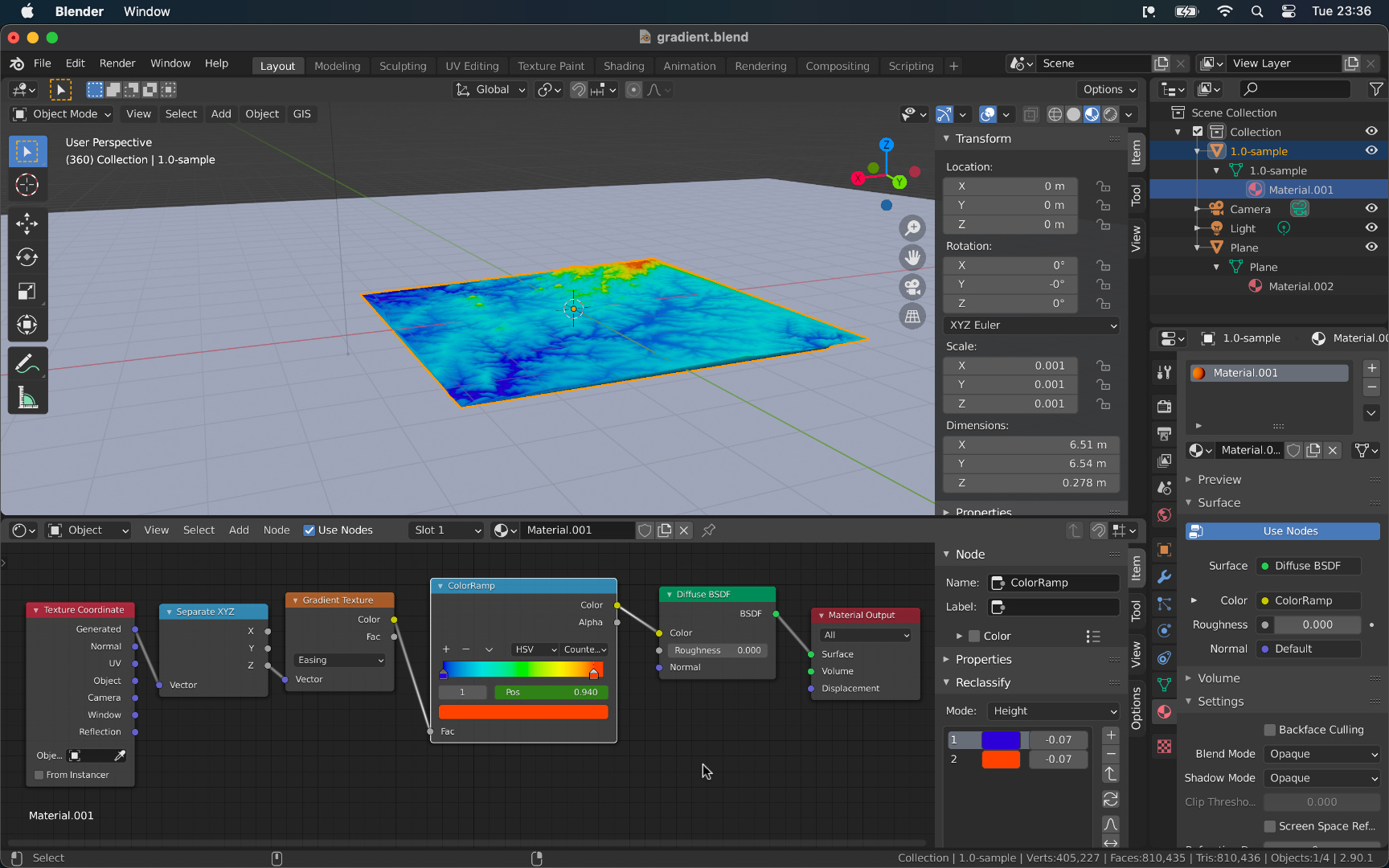

Streamlined the process from digital elevation model to a working Blender project with 3D terrain.


Randomly came upon this page https://viewer.nationalmap.gov/basic/.

It's the main page you're supposed to use to access USGS (US Geological Survey) datasets.

Turns out you can download DEM (Digital Elevation Model) tiles which are rectangular images where each pixel's value describes the elevation at that spot.

So I downloaded them for my region and started importing them into QGIS (software used for processing / analyzing / doing anything with geographical data).

I first found out about this software when I worked on ImThirsty.

So then with these tiles I started wondering what I could do with them. The first thing that came to mind was to try to convert these images into a 3D mesh I could import into Blender.

Many, many trials later I finally found a piece of software called tin-terrain that lets you do exactly that, with a choice between a few different algorithms (terra, zemlya, or a dense packing).

The problem was that each of these tiles is 10000x10000 pixels (each pixel covering 1 m²). My laptop was not up to the task of converting a hundred million vertices into a mesh.

Trying to process the standard 10km x 10km tile would always lead to my laptop running out of memory. So I decided to use some of my free Google Cloud Compute credits to run the same program on an Ubuntu VM.

I kept starting tin-terrain but the VM would always run out of memory eventually. In the end I found that ~30GB of RAM would get the job done.
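To see why a full tile is so heavy, here is a small Python sketch of the naive "dense" conversion, where every DEM pixel becomes a vertex and every grid cell becomes two triangles. This is an illustration of the general idea only, not tin-terrain's implementation, and the function names are made up.

```python
def dense_mesh(heights, cell_size=1.0):
    """Turn a grid of elevations (list of rows) into vertices and triangles.

    Each pixel becomes one vertex (x, y, z); each 2x2 block of pixels
    becomes two triangles that index into the vertex list.
    """
    rows, cols = len(heights), len(heights[0])
    vertices = [
        (c * cell_size, r * cell_size, heights[r][c])
        for r in range(rows)
        for c in range(cols)
    ]
    triangles = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            i = r * cols + c  # index of the cell's top-left vertex
            triangles.append((i, i + 1, i + cols))
            triangles.append((i + 1, i + cols + 1, i + cols))
    return vertices, triangles


def dense_mesh_size(rows, cols):
    """Vertex and triangle counts of a dense mesh, without building it."""
    return rows * cols, 2 * (rows - 1) * (cols - 1)


vertices, triangles = dense_mesh([[0, 1, 2], [1, 2, 3], [2, 3, 4]])
print(dense_mesh_size(10000, 10000))  # (100000000, 199960002)
```

A full tile therefore means a hundred million vertices and roughly two hundred million triangles before any simplification. Algorithms like terra and zemlya exist to approximate the terrain with far fewer triangles.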

Rest will be written later lol (this is a WIP after all).

An iOS app that lets you locate the closest public toilets and drinking water fountains around you. Having to work on this was the entire reason I had to learn QGIS in the first place. I used to manually extract the amenities by tag from the OpenStreetMap planet file. I recently updated it to use the Overpass API, so it works anywhere in the world and with real-time data. I'm working on creating downloadable add-ons that would let you store all of the toilets and water fountains for offline use anywhere in the world! (There are ~230000 water fountains and ~290000 public toilets indexed, compressed down to just 5MB!) This could also work with any other amenities OSM tracks.
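Finding the closest amenity comes down to a distance computation over latitude/longitude pairs. Below is a minimal Python sketch using the haversine formula, assuming the amenities have already been fetched into a list of dicts with `lat` and `lon` keys. The data layout, names, and coordinates are hypothetical, not the app's actual code.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def closest(amenities, lat, lon):
    """Return the amenity nearest to (lat, lon) by a linear scan."""
    return min(amenities, key=lambda a: haversine_m(lat, lon, a["lat"], a["lon"]))


# Made-up entries for illustration.
amenities = [
    {"name": "fountain_far", "lat": 48.90, "lon": 2.40},
    {"name": "fountain_near", "lat": 48.86, "lon": 2.35},
]
nearest = closest(amenities, 48.8566, 2.3522)
```

A linear scan is fine for the results of one nearby query. For the full offline dataset of several hundred thousand points, a spatial index or grid bucketing would likely be worth adding.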


Cohl

At some point while exploring surrealism I fell upon Émile Cohl's Le Cauchemar de Fantoche (1908).

I thought about writing a tweak for my phone that would randomly play a short clip from that film as my background whenever I would turn the screen on.

I never made it past compiling a couple of snippets so I've put them all in this gallery.


Fashion

Was and still am somewhat interested in design and fashion.

Here is a little concept I made a while ago: concept-hoodie-final.pdf.

Also screen recordings of the making of: (youtube links in chronological order)

A (finally) working Flow (from BigDuckGames) solver, implemented in Java. Project is linked on my GitHub right here ^.

You can find a non-working RustyFlow (Rust) attempt here.
